Publication Information

ISSN 2691-8803

Frequency: Continuous

Format: PDF and HTML

Versions: Online (Open Access)

Year first Published: 2019

Language: English

Algorithmic Personalization and Military Narratives: Analyzing Croatian Social Media Strategies

Major Marija Gombar*

Centre for Defence and Strategic Studies “Janko Bobetko”

PhD student, University North, Media and Communication

Received Date: December 27, 2024; Accepted Date: January 27, 2025; Published Date: January 31, 2025

*Corresponding author: Marija Gombar, Centre for Defence and Strategic Studies “Janko Bobetko”; PhD student, University North, Media and Communication; E-mail: gombar.ma@gmail.com, magombar@unin.hr; Phone: +385989709793

Citation: Marija G (2025) Algorithmic Personalization and Military Narratives: Analyzing Croatian Social Media Strategies; Adv Pub Health Com Trop Med: MCADD-119.

DOI: 10.37722/APHCTM.2024206

Abstract

This study examines how social media algorithms influence public perceptions of military service through narrative curation and user engagement. Employing qualitative methods, including content analysis and case studies of Croatian military campaigns, the research identifies how platforms like TikTok, Instagram, and Facebook amplify themes of patriotism, personal growth, and camaraderie while systematically suppressing dissenting viewpoints. Findings highlight the dual nature of algorithmic curation: while enhancing engagement, these systems risk creating ideological silos and limiting democratic dialogue. TikTok engages younger audiences through short, motivational content, while Instagram fosters collective identity through visual storytelling; however, both contribute to filter bubbles, restricting exposure to alternative perspectives. This study situates Croatian military campaigns globally, comparing them to strategies in the United States and Israel, and underscores the need for algorithmic transparency and inclusivity. Practical recommendations include algorithmic audits, thematic diversification, and measures to promote narrative diversity to mitigate polarization and foster democratic discourse. By addressing algorithm-mediated communication’s ethical and societal implications, this research contributes to broader discussions on algorithmic governance and the societal impacts of personalized content.

Keywords: algorithmic governance; algorithmic personalization; digital ecosystems; filter bubbles; echo chambers; military communication strategies; social media narratives.

Introduction

In the era of algorithmic governance, social media platforms have transformed from simple communication tools into potent mediators of digital public opinion. These platforms significantly influence various societal domains, shaping politics, culture, and military narratives. The algorithms governing these platforms determine content visibility, reach, and influence, creating personalized information ecosystems that impact individual perceptions and collective realities (Gillespie, 2014; Bucher, 2018; Zuboff, 2019). This study critically examines the intersection of algorithmic mechanisms and military narratives, focusing on how social media shapes public understanding of military service.

Historically, military marketing has been crucial in constructing national identity and shaping public perceptions of service. Traditional narratives have employed symbols of patriotism, camaraderie, and self-improvement to attract recruits and reinforce institutional legitimacy. In the digital age, however, these narratives are no longer conveyed solely through traditional media. Instead, they are curated and amplified by algorithms that prioritise content based on user interactions and platform-driven incentives (DeVito, 2020). This shift raises critical questions: How does algorithmic personalization shape perceptions of military service? To what extent do echo chambers promote ideological uniformity? What are the broader societal implications of these dynamics? Existing scholarship highlights the dual nature of algorithms. On the one hand, they enhance user engagement by tailoring content to individual preferences. On the other hand, they perpetuate “filter bubbles” that limit exposure to diverse perspectives and reinforce existing beliefs (Pariser, 2011; Noble, 2018; Helberger et al., 2018). This study situates itself within the broader global challenge of fostering ethical algorithmic systems, particularly given their role in shaping civic discourse, inclusivity, and societal trust in democratic institutions.

Within military marketing, this study hypothesizes that algorithmic personalization amplifies favourable narratives while marginalizing dissenting voices, creating a mediated reality where military service appears universally aspirational and rewarding.

This research aims to deepen our understanding of how technology, culture, and public opinion interact in the digital age. In an era where algorithmic systems govern much of our access to information, this research contributes to broader interdisciplinary discussions on digital ethics, algorithmic governance, and societal impacts of personalized content curation. The interplay between algorithms, public narratives, and institutional goals is not just a technical issue; it is a cultural and political challenge with implications for inclusivity, transparency, and the democratization of public discourse. By examining Croatian military campaigns, this study offers insights relevant to global debates about how digital platforms shape collective perceptions and influence social cohesion.

The study emphasizes the ethical implications of algorithmic curation, particularly the systematic exclusion of dissenting voices, which poses significant risks to democratic dialogue and societal inclusivity (Sunstein, 2001; Gillespie, 2014). A predominantly qualitative approach is employed, integrating content analysis, critical discourse analysis (CDA), and case studies to investigate the construction, dissemination, and reception of military narratives within algorithmically mediated environments. While the primary focus is on Croatian military campaigns, the findings reflect global trends in military communication strategies, underscoring the widespread reliance on digital platforms to shape institutional narratives (Davis, 2019; Abidin, 2021).

A distinctive feature of contemporary social media is its ability to deliver highly targeted content to specific demographic groups, aligning messages with their values and interests. However, this capability often results in echo chambers that amplify dominant narratives—such as patriotism, camaraderie, and personal growth—while filtering out alternative perspectives (Bakshy et al., 2015). This dynamic raises urgent questions: How does content personalization influence younger audiences’ attitudes toward military service? What are the implications of algorithmically mediated narratives for democratic dialogue and the visibility of dissenting viewpoints? While algorithms improve communication efficiency, they also risk exacerbating polarization and limiting the diversity of public discourse (Dubois and Blank, 2018).

Algorithmic personalization significantly shapes individualized information ecosystems (Gillespie, 2014; Bucher, 2018). While this customization can enhance user engagement by providing tailored content, it also risks creating “filter bubbles” (Pariser, 2011), which may limit the variety of perspectives users encounter and reinforce their existing beliefs. In the context of military marketing, algorithmic personalization targets specific demographic groups with carefully curated narratives. Platforms such as TikTok and Instagram surface military-themed content that resonates with younger audiences, highlighting themes of personal growth, camaraderie, and adventure. For instance, Instagram’s visual format showcases emotionally compelling imagery, while TikTok’s algorithm promotes short, aspirational videos designed to boost user engagement. However, these practices raise ethical concerns. Algorithms often lack transparency and accountability, operating as opaque systems that prioritize institutional objectives over the representation of dissenting voices (Tufekci, 2015). By marginalizing alternative viewpoints, algorithms create mediated realities that present military service as universally aspirational while obscuring its ethical and societal complexities.

The concept of echo chambers extends the critique of filter bubbles by examining how algorithmic curation and user behavior together generate ideologically insulated environments. Within these feedback loops, users are repeatedly exposed to similar messages, which reinforces their beliefs and diminishes critical engagement (Sunstein, 2001).

Theoretical framework

This study is rooted in three interconnected concepts: algorithmic personalization, echo chambers, and the media’s construction of reality. Together, these frameworks provide a comprehensive lens to examine how digital infrastructures mediate, filter, and amplify military narratives. By investigating these mechanisms, the study explores how social media platforms influence public perceptions and shape individual and collective attitudes toward military service. While focusing on the Croatian military context, the theoretical implications extend to broader domains such as political communication, public health campaigns, and consumer marketing, underscoring the study’s interdisciplinary significance.

Algorithmic personalization is central to modern digital media. Algorithms curate content by analyzing user behaviours, preferences, and interactions, creating highly individualized information ecosystems (Gillespie, 2014; Bucher, 2018). This customization enhances user engagement by tailoring content to individual interests, but it also risks creating “filter bubbles” (Pariser, 2011). These bubbles limit the variety of perspectives available to users, reinforcing existing beliefs and reducing exposure to alternative viewpoints. In military marketing, algorithmic personalization enables the precise targeting of demographic groups with curated narratives. Platforms like TikTok and Instagram prioritize content that appeals to younger audiences, particularly themes of personal growth, camaraderie, and adventure. For example, Instagram’s visually focused format amplifies emotionally compelling imagery, while TikTok’s algorithm favours short, motivational videos that maximize engagement. However, these mechanisms raise ethical concerns. Algorithms often function as opaque systems that prioritise institutional objectives over inclusivity, marginalizing dissenting voices (Tufekci, 2015). This selective amplification constructs mediated realities that portray military service as universally aspirational, obscuring its ethical and societal complexities.

The echo chamber concept builds on the filter bubble critique, examining how algorithmic curation and user behaviour foster ideologically insulated environments. In these feedback loops, users encounter similar messages repeatedly, reinforcing existing beliefs and diminishing critical engagement (Sunstein, 2001). Military marketing campaigns frequently benefit from this phenomenon, in which emotionally resonant narratives of heroism, patriotism, and duty dominate digital spaces while counter-narratives are systematically excluded. For military campaigns, echo chambers are therefore double-edged. They amplify favourable narratives within targeted audience segments, fostering engagement and resonance, but they also risk entrenching ideological silos, exacerbating polarization, and limiting exposure to diverse viewpoints (Dubois and Blank, 2018). This study explores the ethical implications of echo chambers, particularly their role in cultivating collective identity through emotional appeals while raising concerns about inclusivity and the suppression of dissent.
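The feedback loop described above can be illustrated with a deliberately simplified, hypothetical simulation. It assumes a ranker that orders posts by one user's affinity for each theme, and that every post shown is engaged with, which raises affinity for its theme. The catalogue, the update rule, and all names are illustrative assumptions, not a description of how TikTok, Instagram, or Facebook actually rank content.

```python
from collections import Counter

# Hypothetical catalogue of (post_id, theme) pairs, for illustration only.
CATALOGUE = [
    ("p1", "patriotism"), ("p2", "personal_growth"), ("p3", "camaraderie"),
    ("p4", "adventure"), ("p5", "critical"), ("p6", "patriotism"),
    ("p7", "personal_growth"), ("p8", "critical"),
]

def rank_feed(affinity, k):
    """Order posts by the user's affinity for their theme (ties broken
    by post id) and return the top-k posts as the feed."""
    ranked = sorted(CATALOGUE, key=lambda post: (-affinity[post[1]], post[0]))
    return ranked[:k]

def simulate(rounds=5, k=3, boost=1.0):
    """Run the engagement -> affinity -> ranking loop for several rounds."""
    # Equal starting affinity, plus a slight initial preference for patriotism.
    affinity = Counter({theme: 1.0 for _, theme in CATALOGUE})
    affinity["patriotism"] += 0.5
    history = []
    for _ in range(rounds):
        feed = rank_feed(affinity, k)
        # Assumed: every shown post is engaged with, raising its theme's
        # affinity, which feeds back into the next round's ranking.
        for _, theme in feed:
            affinity[theme] += boost
        history.append(Counter(theme for _, theme in feed))
    return history, affinity
```

Because engagement feeds straight back into ranking, the small initial preference locks the feed onto the same themes, and the hypothetical "critical" posts are never shown in any round.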

The media construction of reality framework highlights how media influence societal perceptions through selective framing and representation, rather than simply reflecting reality (Gerbner and Gross, 2017; Entman, 1993). This study expands upon Gerbner’s theories regarding the role of media in shaping reality by placing them within the context of the digital era, where algorithms actively influence not only what content is visible but also how societal narratives are framed and consumed. By combining these foundational concepts with contemporary algorithmic methods, this research creates a link between traditional media theories and their current applications in digital communication environments. This synthesis emphasizes the evolving interaction between human agency, institutional narratives, and technological mediation in shaping collective realities.

In digital contexts, the prioritization of algorithms intensifies these effects by promoting visually engaging and emotionally impactful content. Military marketing strategically utilizes this framework to create narratives focusing on personal growth, camaraderie, and national contribution. For instance, Croatian military campaigns often employ testimonial-style content, enriched with evocative imagery and language, to portray military service as transformative. This approach aligns with Entman’s (1993) framing theory, highlighting how selective emphasis shapes public understanding. However, it risks oversimplifying the complexities of military life, fostering unrealistic expectations among audiences, and excluding dissenting perspectives.

The interplay of algorithmic personalization, echo chambers, and media framing raises significant ethical concerns. While these mechanisms effectively engage audiences, they also amplify dominant narratives, reinforcing ideological silos and limiting the diversity of democratic discourse (Pariser, 2011; Gillespie, 2014). Moreover, the lack of transparency in algorithmic decision-making complicates accountability efforts, allowing platforms to exercise considerable control over which narratives are amplified or suppressed (Noble, 2018). To address these challenges, policymakers and platform designers must implement strategies that promote content diversity and balance emotionally resonant narratives with broader societal representation. Initiatives such as algorithmic audits, exposure diversity principles (Helberger et al., 2018), and transparency mechanisms can help mitigate polarization risks while fostering equitable digital ecosystems.
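Exposure diversity principles (Helberger et al., 2018) do not prescribe a single formula. One common way to operationalize them, assumed here purely for illustration, is the normalized Shannon entropy of the themes a user is shown; the function name and theme labels are hypothetical.

```python
import math
from collections import Counter

def exposure_diversity(feed_themes, n_categories):
    """Normalized Shannon entropy of the theme distribution in a feed.

    Returns 0.0 when every item shares one theme and 1.0 when items are
    spread evenly across all n_categories themes.
    """
    counts = Counter(feed_themes)
    total = sum(counts.values())
    if total == 0 or n_categories < 2:
        return 0.0
    # H = sum(p * log(1/p)) over observed themes, with p = count / total.
    entropy = sum((c / total) * math.log(total / c) for c in counts.values())
    return entropy / math.log(n_categories)
```

A feed of only patriotic posts scores 0.0, while a feed spread evenly over all five themes (including critical content) scores 1.0, so an audit could track this value per user segment over time.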

Methodology

This study employs a mixed-methods approach that integrates qualitative and quantitative techniques to examine how social media algorithms shape public perceptions of military service through personalization and the creation of echo chambers. Combining content analysis, critical discourse analysis (CDA), and case studies, the research investigates the construction, dissemination, and amplification of military narratives within algorithm-driven digital ecosystems. This methodological framework builds on established scholarship regarding media influence and algorithmic filtering (Gillespie, 2014; Bucher, 2018; Couldry and Hepp, 2017), ensuring a comprehensive and interdisciplinary understanding of these dynamics.

The content analysis decodes representations of military service across three major social media platforms: Facebook, Instagram, and TikTok. It incorporates visual semiotics to examine how imagery, tone, and symbolic references shape audience perceptions (Neuendorf, 2017). For instance, posts emphasizing patriotism often utilize national symbols and emotionally charged language, which algorithms tend to amplify due to higher engagement metrics (Meyer et al., 2019). This approach includes an analysis of detailed engagement metrics—likes, shares, comments, and reach—enabling a granular understanding of narrative prioritization across platforms.
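Where manual coding is supplemented by automated screening, a dictionary-based coder is one simple option. The sketch below is hypothetical: the codebook keywords are English placeholders invented for illustration, not the study's coding scheme, and content in other languages would need its own validated dictionary.

```python
import re

# Hypothetical codebook: placeholder keywords per narrative theme.
CODEBOOK = {
    "patriotism": {"homeland", "flag", "nation", "defend"},
    "personal_growth": {"discipline", "growth", "skills", "challenge"},
    "camaraderie": {"team", "brothers", "together", "unit"},
    "adventure": {"adventure", "explore", "mission", "travel"},
}

def code_post(text):
    """Return the sorted list of themes whose keywords occur in the text."""
    # Lowercase and split into alphabetic tokens (ASCII letters only).
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return sorted(theme for theme, words in CODEBOOK.items() if tokens & words)
```

A post may receive several codes; in practice such automated labels would be checked against a manually coded sample before use.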

The CDA, grounded in Fairclough’s (2003) framework, deconstructs rhetorical and semiotic strategies embedded in military-themed content. Sentiment analysis was used to quantify the tone of user-generated comments, offering data-driven insights into how audiences interact with and perpetuate these narratives within algorithmically curated echo chambers. For this purpose, the Python library TextBlob was used to measure sentiment polarity, providing a quantitative foundation that complements the qualitative deconstruction of dominant narratives.
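Turning per-comment polarity scores into a sentiment distribution can be sketched as follows. TextBlob's `TextBlob(text).sentiment.polarity` returns a score between -1 and 1; the neutral band of ±0.05 and the function names are assumptions, and the scorer is passed in as a parameter so the sketch runs without TextBlob installed.

```python
from collections import Counter

def label_polarity(score, neutral_band=0.05):
    """Map a polarity score in [-1, 1] to a coarse label.
    The +/-0.05 neutral band is an assumed cut-off, not the study's."""
    if score > neutral_band:
        return "positive"
    if score < -neutral_band:
        return "negative"
    return "neutral"

def sentiment_distribution(comments, scorer):
    """Share of positive, neutral, and negative comments.

    `scorer` maps a comment string to a polarity score, e.g.
    lambda text: TextBlob(text).sentiment.polarity when TextBlob is installed.
    """
    if not comments:
        return {}
    labels = Counter(label_polarity(scorer(c)) for c in comments)
    n = len(comments)
    return {k: labels[k] / n for k in ("positive", "neutral", "negative")}
```

Keeping the scorer pluggable makes it easy to compare TextBlob against another sentiment model on the same comment set.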

The case studies focus on Croatian military marketing campaigns, tracing their lifecycle across platforms and comparing the tailoring and amplification of narratives on TikTok, Instagram, and Facebook. Engagement metrics such as reach, interaction rates, and sentiment distribution were analyzed to evaluate campaign effectiveness. Cross-platform comparisons revealed considerable variability in algorithmic influence and in personalization strategies targeting distinct demographic groups, such as younger TikTok audiences and older Facebook users.
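The study does not give a formula for interaction rate. A common definition, assumed here, divides the sum of likes, comments, and shares by reach; the `Post` record and the function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Post:
    platform: str
    theme: str
    likes: int
    comments: int
    shares: int
    reach: int

def interaction_rate(post):
    """Interactions per unit of reach (assumed definition)."""
    if post.reach == 0:
        return 0.0
    return (post.likes + post.comments + post.shares) / post.reach

def mean_rate_by_platform(posts):
    """Average interaction rate per platform for cross-platform comparison."""
    rates = defaultdict(list)
    for post in posts:
        rates[post.platform].append(interaction_rate(post))
    return {platform: sum(r) / len(r) for platform, r in rates.items()}
```

Normalizing by reach matters because raw like counts favour whichever platform shows a post to more people; the rate compares how strongly audiences respond once reached.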

Data collection spanned six months and involved scraping posts, advertisements, and user comments from publicly accessible sources. Tools such as CrowdTangle and platform-specific APIs were used to collect the data, prioritizing posts exceeding 1,000 views to capture impactful content. Ethical considerations were rigorously addressed: all personal data were anonymized, and platform policies were followed in line with digital research guidelines (Markham and Buchanan, 2012).
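The view threshold and the anonymization step can be sketched together. The 1,000-view cut-off comes from the text, treated here as an inclusion filter; replacing user identifiers with a keyed HMAC-SHA256 hash is an assumed technique (the study does not specify its method), and strictly speaking it yields pseudonymized rather than fully anonymous data. The record fields and function names are hypothetical.

```python
import hashlib
import hmac

VIEW_THRESHOLD = 1_000  # posts must exceed this many views (from the text)

def pseudonymize(user_id, secret_key):
    """Replace a user id with a keyed hash so records by the same author
    can still be linked without storing the original identifier."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def prepare(records, secret_key):
    """Keep posts above the view threshold and pseudonymize their authors.
    Input records are left unmodified; new dicts are returned."""
    cleaned = []
    for rec in records:
        if rec["views"] > VIEW_THRESHOLD:
            cleaned.append({**rec, "author": pseudonymize(rec["author"], secret_key)})
    return cleaned
```

The secret key should be stored separately from the dataset; without it, the hashed identifiers cannot be recomputed from known usernames.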

Results

This section provides a detailed analysis of the findings, structured around the theoretical frameworks of algorithmic personalization, echo chambers, and the media’s construction of reality. TikTok, Instagram, and Facebook data highlight how algorithmic systems prioritize and amplify military-themed narratives. Four key themes—patriotism, personal growth, camaraderie, and adventure—anchor the analysis, revealing engagement patterns and their implications for digital public discourse. These results are examined through the lens of algorithmic amplification and narrative construction, underscoring the role of digital infrastructures in shaping collective perceptions.

As Couldry and Hepp (2017) argue, platforms are not neutral intermediaries but active agents in the mediated construction of reality—a point further supported by Gillespie (2014) and Bucher (2018). This analysis situates these dynamics within the broader context of algorithmic governance, engaging with recent scholarship on platform-driven informational ecosystems (van Dijck et al., 2013). Similarly, Bruns and Highfield (2015) emphasise how algorithmic infrastructures amplify polarising content, limiting exposure to diverse viewpoints and reinforcing ideological divides.

The results demonstrate a strong correlation between algorithmic prioritisation and user engagement. TikTok and Instagram have emerged as dominant platforms, amplifying personal growth and patriotism themes through visually dynamic and emotionally resonant content. These findings align with Tufekci’s (2015) insights into algorithmic amplification, illustrating how platform-specific affordances cater to younger audiences by emphasising aspirational narratives. In contrast, Facebook shows declining relevance, particularly among younger demographics, due to its text-heavy and less visually engaging content (Zuboff, 2019). King and Fielding (2023) similarly observed that TikTok’s focus on short-form video content consistently outperforms platforms like Facebook, where text-heavy posts resonate less with younger audiences. Taylor (2022) further underscores that algorithmic amplification of institutional narratives often operates without sufficient public scrutiny, raising ethical concerns about accountability and transparency in digital governance.

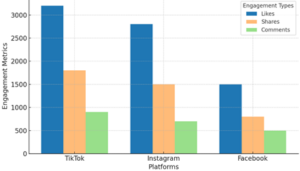

Table 1: Summary of average engagement metrics by narrative themes

Table 1 compares user engagement metrics (likes, comments, shares, and reach) across TikTok, Instagram, and Facebook, categorized into four main narrative themes: patriotism, personal growth, camaraderie, and adventure. It illustrates how different algorithms influence user interaction patterns and how each platform can amplify military-related narratives. The distribution of user engagement across these platforms reveals distinct, platform-specific patterns in how military narratives resonate with audiences. TikTok stands out as the leading platform for content centred on patriotism, achieving the highest engagement metrics across all categories. Its algorithm, which prioritizes emotionally resonant short-form video content, appeals strongly to younger audiences and produces high interaction levels. Instagram also performs strongly with patriotic narratives, leveraging its visually oriented interface for impactful storytelling. As Walker and Green (2022) noted, visual storytelling drives user engagement, particularly when symbolic imagery and emotionally evocative content enhance the narrative’s resonance.

On the other hand, Facebook shows comparatively lower engagement across all narrative themes, indicating a decline in relevance among younger users. However, it maintains a modest influence in promoting adventure-themed content, especially among older demographics. These metrics underscore the role of algorithms in shaping digital public discourse, particularly their capacity to amplify narratives that align with platform-specific user behaviours and preferences. These platforms create distinct informational ecosystems that reinforce ideological alignment and emotional connection by selectively prioritising content, as Gillespie (2014) and Zuboff (2019) discussed.

Graph 1: Engagement metrics for the patriotism theme by platform

Graph 1 complements Table 1 by focusing on patriotic content, illustrating how platform-specific features enhance engagement metrics. TikTok’s dominance in likes, shares, and comments reflects its algorithmic prioritization of emotionally resonant short-form videos, effectively reaching younger audiences (Boyd, 2014; Bucher, 2018). Instagram also performs well, especially in content sharing, due to its focus on collective identity and visual storytelling (Meyer et al., 2019). In contrast, Facebook’s comparatively lower engagement metrics highlight its reduced relevance among younger demographics and its diminished focus on emotionally engaging content (Gillespie, 2014).

The amplification of patriotic narratives across these platforms demonstrates how algorithmically curated ecosystems shape public perceptions of national identity. TikTok engages users under 25 with videos showcasing camaraderie and personal growth. Similarly, Instagram nurtures patriotism and collective solidarity through visually rich posts featuring national symbols and aspirational captions. These findings align with Tufekci’s (2015) analysis of algorithmic amplification, emphasizing the impact of each platform’s unique features on narrative visibility and user engagement. Table 1 and Graph 1 illustrate that TikTok and Instagram are dominant platforms for fostering engagement with patriotic and aspirational narratives. However, these platforms also raise ethical concerns regarding inclusivity and the marginalization of dissenting perspectives. Algorithms prioritize emotionally resonant content, creating mediated realities that align with institutional objectives—such as military recruitment—while limiting the diversity of public discourse. The clustering of user interactions within ideologically aligned communities reflects a selective amplification of content that reinforces existing beliefs, further entrenching echo chambers.

These findings underscore the need for algorithmic transparency and inclusivity to address the risks associated with algorithmic bias. As TikTok and Instagram emerge as powerful tools for conveying patriotic and aspirational narratives, critically examining their impact on public perceptions of military service is essential. These algorithms shape collective understandings of national identity by amplifying emotionally charged themes, often marginalizing alternative perspectives and raising important ethical and societal questions.

Discussion

Theoretical implications: Algorithmic personalization and echo chambers

Foucault’s (1972) concept of discursive power offers a valuable perspective for understanding how algorithms influence public narratives about military service. By emphasizing themes such as patriotism and personal growth, algorithms act as gatekeepers, reinforcing institutional priorities and limiting the scope of discourse. This aligns with Latour’s Actor-Network Theory, which views algorithms as active participants within sociotechnical networks. Rather than being neutral tools, algorithms collaborate with human actors and cultural artefacts to construct mediated realities, embedding institutional values into digital spaces. Baudrillard’s theory of simulacra further explains how military-themed content on platforms like TikTok and Instagram creates a hyperreality of military life. This idealized representation obscures the complexities, sacrifices, and ethical dilemmas of military service. These hyperreal narratives perpetuate aspirational illusions that resonate with younger audiences while systematically marginalizing dissenting viewpoints.

This study highlights the significant impact of algorithmic personalization on public perceptions of military service. Platforms such as TikTok and Instagram function as cultural intermediaries, curating content that aligns with platform incentives and user preferences. For example, TikTok’s algorithm amplifies emotionally charged narratives to captivate its largely younger demographic. Similarly, Instagram’s visually driven interface effectively promotes symbolic and aspirational storytelling. These algorithms create ideologically narrow digital environments, amplifying dominant narratives while suppressing alternative or dissenting perspectives (Pariser, 2011; Sunstein, 2001). The findings of this study align with Entman’s (1993) framing theory, which emphasizes how narrative structures shape audience perceptions. By selectively highlighting aspirational aspects of military service, such as personal growth and camaraderie, algorithms reinforce institutional objectives while omitting the complex ethical dimensions and challenges inherent in military life. Although this narrative amplification may boost engagement, it risks fostering ideological silos that limit exposure to diverse perspectives, which are essential for democratic discourse (Dubois and Blank, 2018).

Addressing these challenges requires greater transparency in the governance of algorithms. It is crucial to implement mechanisms that expose users to alternative narratives in order to counteract the effects of filter bubbles and echo chambers. Algorithmic transparency is vital for fostering democratic dialogue, as it holds content curation and amplification accountable. Collaboration between social media platforms and independent researchers can facilitate the development of inclusive algorithmic systems, ensuring that narrative diversity is prioritized and actively implemented. Such partnerships would enable shared insights into the design and impact of algorithms, promoting a fairer digital ecosystem where both institutional and dissenting perspectives can coexist. Conducting algorithmic audits and integrating diversity metrics could help mitigate the risks of polarization by ensuring a more balanced representation of military narratives. These interventions are necessary to foster a more inclusive and equitable digital ecosystem.

Ethical and societal implications

The amplification of emotionally charged narratives raises significant ethical concerns, particularly regarding inclusivity, transparency, and democratic accountability. Algorithms often marginalize dissenting voices and underrepresented perspectives, which perpetuates structural inequalities. To address these challenges, military organizations and policymakers should prioritize implementing algorithmic audits to identify and correct biases in content prioritization. Additionally, platforms can adopt exposure diversity principles to ensure that a broader range of perspectives, including critical and dissenting views, is represented in users’ content feeds. These steps would help mitigate polarization and foster more inclusive public discourse. Noble’s (2018) research on algorithmic bias highlights how personalization mechanisms prioritize content that aligns with dominant ideologies while sidelining narratives from minority groups. The engagement metrics analyzed in this study support this trend, revealing a disproportionate amplification of patriotism and personal growth themes. In contrast, critical perspectives on military ethics and personal sacrifices remain largely overlooked.
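One simple output-level audit of the disproportionate amplification noted above compares each theme's share of engagement with its share of published posts. The ratio and the field names are assumptions for illustration; because it observes only outcomes, it cannot by itself separate platform ranking effects from audience preference.

```python
from collections import Counter

def amplification_ratios(posts):
    """For each theme: (share of total engagement) / (share of posts).

    A value above 1 means the theme attracts more engagement than its
    share of published content would predict; below 1 means less.
    """
    post_counts = Counter(p["theme"] for p in posts)
    engagement = Counter()
    for p in posts:
        engagement[p["theme"]] += p["engagement"]
    n_posts = len(posts)
    total_engagement = sum(engagement.values())
    if n_posts == 0 or total_engagement == 0:
        return {}
    return {
        theme: (engagement[theme] / total_engagement) / (count / n_posts)
        for theme, count in post_counts.items()
    }
```

Repeating the calculation per platform and per audit period would show whether the gap between dominant and critical themes widens or narrows over time.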

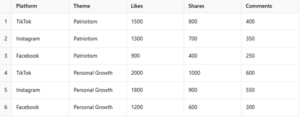

Table 2: Detailed engagement metrics by themes and platforms

This uneven amplification sheds light on the broader societal risks linked to algorithmic bias. Platforms like TikTok prioritize content aligning with institutional narratives and audience preferences, reinforcing dominant perspectives while often excluding underrepresented viewpoints. Table 2 presents engagement metrics—likes, shares, and comments—across TikTok, Instagram, and Facebook, focusing on two prominent military narratives: Patriotism and Personal Growth. TikTok and Instagram lead in engagement for patriotic content, with TikTok excelling in likes and shares. This trend aligns with Pariser’s (2011) concept of “filter bubbles,” where algorithmic curation reinforces dominant cultural narratives. Likewise, TikTok’s strength in promoting Personal Growth—achieving over 2,000 likes and 1,000 shares—reflects its algorithmically designed focus on motivational and aspirational content tailored to younger demographics. These findings support Gillespie’s (2014) argument that algorithms act as cultural intermediaries, shaping informational ecosystems to maximize engagement. While this amplification strategy effectively highlights institutional narratives, it marginalizes critical or alternative viewpoints. As Couldry and Hepp (2017) point out, digital infrastructures actively mediate and construct social realities, selectively amplifying narratives that align with dominant values. The findings of this study suggest that military-themed content, crafted to reflect values of patriotism and personal growth, strategically employs symbolic imagery and emotive language to engage audiences. However, the lack of alternative perspectives results in a one-dimensional understanding of military service, echoing concerns about echo chambers raised by Sunstein (2001).

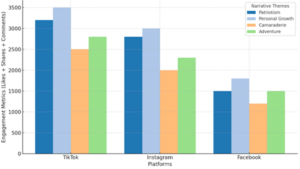

Graph 2 shows that TikTok outperforms Instagram and Facebook in boosting engagement metrics for all key narrative themes, especially Personal Growth and Patriotism. This strength highlights the platform’s algorithmic preference for impactful, emotionally resonant content for younger audiences.

Graph 2: Comparative engagement metrics across platforms and narrative themes

Graph 2 presents TikTok, Instagram, and Facebook engagement metrics for four key narrative themes: Patriotism, Personal Growth, Camaraderie, and Adventure. TikTok shows a notable algorithmic advantage, particularly for Personal Growth, with over 3,500 interactions. This reflects TikTok’s focus on short-form, emotionally resonant content that appeals to younger audiences (Kaye et al., 2021). Instagram follows closely, consistently engaging users with visually symbolic content, especially for Patriotism and Personal Growth (Davis and Green, 2022). In contrast, Facebook exhibits the lowest engagement, peaking below 2,000 interactions for Adventure. This weaker performance is attributed to its text-heavy format and diminishing relevance among younger demographics (Bruns and Highfield, 2023).

These findings connect to key theoretical concepts, notably algorithmic personalization (Gillespie, 2014) and echo chambers (Cinelli et al., 2021). TikTok and Instagram excel at amplifying emotionally engaging narratives, but their algorithmic preferences risk marginalizing dissenting views and exacerbating polarization (Helberger et al., 2023). Facebook’s weaker performance highlights the challenge of reaching younger users in a competitive digital landscape. The amplification of ideologically aligned narratives on platforms like TikTok and Instagram raises significant ethical dilemmas. Algorithmic opacity complicates accountability, as users are often unaware of the mechanisms shaping their informational experiences. The clustering of engagement within ideologically aligned communities reflects the risks of echo chambers, which further marginalize dissenting voices and reduce diversity in public discourse.

To address these disparities, algorithmic audits and diversity metrics are crucial. Transparency in content curation is necessary to ensure a balanced representation of voices, especially in politically sensitive contexts such as military communication. Helberger et al. (2023) emphasize the importance of inclusivity in algorithmic governance, advocating regulatory frameworks that prioritize diverse content exposure while mitigating polarization risks. Platforms should also implement mechanisms that promote counter-narratives and diversify content recommendations, fostering a more inclusive and democratic digital discourse. Together with user education programs, such measures can help platforms build diverse and inclusive digital ecosystems that prioritize civic engagement and democratic dialogue. These findings also point to the need for future research into the long-term effects of algorithmically curated content; studies of user perceptions, societal polarization, and the psychological impacts of echo chambers could provide deeper insights into the societal consequences of algorithmic mediation.
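As a minimal sketch of what a diversity metric for such audits might look like, the Python below computes the normalized Shannon entropy of narrative themes in a sample of recommended posts. It assumes an auditor has already coded each post with a single theme, in the manner of the content analysis used here; the codebook, the function name `theme_diversity`, and the feed samples are hypothetical and are not drawn from this study’s data.

```python
import math
from collections import Counter

# Hypothetical coding frame: the four themes analyzed in this study plus two
# underrepresented themes named in the recommendations. Not the study's codebook.
CODEBOOK = ("Patriotism", "Personal Growth", "Camaraderie", "Adventure",
            "Ethical Dilemmas", "Personal Sacrifice")


def theme_diversity(themes, codebook=CODEBOOK):
    """Shannon entropy of coded themes, normalized by log(len(codebook)).

    Returns 0.0 when every sampled post carries the same theme and 1.0 when
    all codebook themes occur equally often. Assumes one theme per post.
    """
    unknown = set(themes) - set(codebook)
    if unknown:
        raise ValueError(f"themes not in codebook: {sorted(unknown)}")
    total = len(themes)
    if total == 0:
        return 0.0
    counts = Counter(themes)
    # H = sum of p * log(1/p) over the themes that actually occur
    entropy = sum((c / total) * math.log(total / c) for c in counts.values())
    return entropy / math.log(len(codebook))


# Illustrative (invented) feed samples of ten coded posts each.
narrow_feed = ["Patriotism"] * 6 + ["Personal Growth"] * 4
broad_feed = (["Patriotism"] * 3 + ["Personal Growth"] * 2 + ["Camaraderie"] * 2
              + ["Ethical Dilemmas"] * 2 + ["Personal Sacrifice"])

print(f"narrow feed: {theme_diversity(narrow_feed):.2f}")
print(f"broad feed:  {theme_diversity(broad_feed):.2f}")
```

On these invented samples the narrow feed scores about 0.38 and the broad feed about 0.87. Entropy is only one possible choice: it rewards balance across themes but says nothing about which perspectives are present, so an audit would likely pair it with per-theme checks.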

Global context and comparative analysis

Croatian military campaigns share similarities with those in the United States and Israel while reflecting distinct cultural and institutional adaptations. U.S. campaigns often use emotional messaging focused on personal achievement and community contribution, presenting military service as a pathway to self-improvement and career advancement. This strategy aligns with Zuboff’s (2019) account of surveillance capitalism, in which data-driven systems target users with tailored narratives that align institutional goals with individual aspirations. In contrast, Israeli campaigns emphasize collective solidarity and national identity, using multi-layered digital strategies that merge textual, visual, and interactive elements (Davis, 2019; Abidin, 2021). This approach reflects Couldry and Hepp’s (2017) conceptualization of media as active agents in constructing collective realities, embedding institutional narratives into everyday digital interactions.

Croatian military campaigns reveal patterns that align with global trends in the militarization of digital culture, yet they also exhibit significant cultural distinctions. In the United States, military narratives are deeply embedded in popular culture through gaming, cinema, and television, creating a pervasive ecosystem that blurs the lines between entertainment and recruitment messaging (Boyd, 2014). These campaigns emphasize individual accomplishments and community support, framing military service as an aspirational and patriotic endeavour. On the other hand, Israeli campaigns prioritize collective solidarity and shared responsibility, crafting narratives that foster national unity and public support. Their multi-faceted approach incorporates textual and visual storytelling alongside interactive formats, resulting in highly engaging experiences (Tufekci, 2015).

In contrast, Croatian military campaigns rely heavily on short-form, visually dynamic content, particularly on platforms like TikTok and Instagram. These platforms effectively target younger demographics using emotionally resonant imagery and motivational messaging. With its algorithmic focus on personalization and emotional appeal, TikTok amplifies themes of personal growth and camaraderie, reflecting Kaye et al.’s (2021) findings on short-form video content as a medium for aspirational storytelling. Instagram, in comparison, uses visual storytelling to promote patriotic themes through symbolic imagery and narratives of collective identity (Meyer et al., 2019). This dependence on algorithmically tailored formats highlights the adaptability of global trends to local cultural contexts, demonstrating that military organizations must align their strategies with specific audience values and preferences (Helberger et al., 2018).

Croatia’s focus on aspirational themes, conveyed through symbolic imagery and evocative language, also parallels broader trends in Russia and China. In Russia, military narratives are closely tied to nationalist propaganda, using platforms such as VKontakte to foster collective identities rooted in historical pride and geopolitical narratives (Bruns and Highfield, 2023). These campaigns embed military messaging within state-controlled media ecosystems, employing algorithms to reinforce ideological coherence (Cinelli et al., 2021). Similarly, China’s military campaigns align with the state’s broader digital governance strategies, using platforms like WeChat and Douyin (the Chinese counterpart of TikTok) to emphasize national duty and collective solidarity (Taylor, 2022). In these cases, algorithmically optimized content merges patriotic narratives with aspirational storytelling to engage younger audiences while reinforcing state authority in public discourse.

These examples illustrate the dual role of algorithms as tools of communication and instruments of state influence, shaping public perceptions through carefully curated digital environments (Ananny and Crawford, 2020). While Croatian campaigns are less overtly politicized, they share key characteristics with these global examples: their reliance on emotionally resonant, visually driven narratives reflects a nuanced adaptation of international practices to local cultural and institutional contexts. Unlike those of larger military powers, Croatia’s campaigns are not extensively integrated into broader media ecosystems, which makes their success highly dependent on platform-specific features and algorithmic amplification. This dependence highlights the strategic agility required of smaller states, which must balance the adoption of global best practices against their own sociopolitical realities. These international examples offer valuable lessons for Croatian military campaigns. Aligning algorithmic strategies with culturally relevant values is essential to maximize engagement while avoiding ideological overreach or alienation. For example, integrating counter-narratives, as seen in experimental campaigns in the United States, could serve as a model for fostering more balanced and inclusive discourse (Dubois and Blank, 2018). By diversifying narrative frameworks and embracing transparency in algorithmic processes, Croatian campaigns could mitigate polarization risks and cultivate broader public trust.

Global trends in algorithmic governance further illustrate how technology shapes communication and societal values. The personalized content ecosystems of TikTok and Instagram effectively amplify institutional narratives, but they also risk entrenching ideological silos and limiting exposure to alternative perspectives (Pariser, 2011). The Croatian context demonstrates the double-edged nature of these mechanisms: while they enhance engagement and narrative resonance, they simultaneously marginalize dissenting voices and constrain democratic dialogue (Gillespie, 2014; Noble, 2018). Addressing these challenges requires balancing the strengths of algorithmic personalization against the inclusion of diverse perspectives in public discourse. Ultimately, the global spread of algorithm-driven communication strategies highlights their adaptability across varied cultural and political landscapes. Croatian military campaigns exemplify how smaller states can navigate these dynamics, blending global best practices with local innovation to create narratives that resonate with their audiences. These findings underscore the broader implications of algorithmic mediation and the need for continued research into how digital technologies shape collective perceptions in an interconnected world.

Practical recommendations for military and political campaigns

Military organizations and political institutions must adopt strategies to diversify narrative exposure and promote transparency in algorithmic processes to mitigate the risks of polarization and ideological uniformity. This includes ensuring that content personalization reflects a balance of perspectives, moving beyond dominant themes such as patriotism and personal growth to incorporate narratives addressing ethical dilemmas, personal sacrifices, and the complexities of military life. By broadening the thematic scope, campaigns can provide a more holistic view of military service, fostering greater public trust and inclusivity.

Campaign messaging should undergo thematic rotation, presenting a balanced narrative framework highlighting military service’s aspirational and challenging aspects. Incorporating themes such as mental health, personal sacrifices, and ethical considerations can help audiences develop a nuanced understanding of military roles. This approach aligns with Helberger et al.’s (2018) call for exposure diversity, which stresses the importance of representing various voices and perspectives within algorithmically curated ecosystems.

Educational initiatives are essential for empowering users to engage critically with algorithmically curated content. Programs designed to enhance digital literacy can help individuals recognize how personalization mechanisms influence their perceptions, enabling them to actively seek out diverse viewpoints. These efforts are particularly crucial for younger demographics, who are highly engaged with platforms like TikTok and Instagram. Digital literacy campaigns can complement existing recruitment strategies, fostering a more informed and inclusive digital public sphere.

Collaboration between military organizations and social media platforms is critical for fostering inclusive dialogue. Military organizations could work with platforms to design recommendation algorithms that surface underrepresented narratives, such as ethical dilemmas and personal sacrifices, alongside the dominant themes of patriotism and personal growth. Platforms could complement this with features that encourage multi-perspective engagement, such as curated content threads juxtaposing contrasting viewpoints or tools that highlight counter-narratives in comment sections. On TikTok and Instagram, for example, such features could expose users to contrasting perspectives within their own content feeds, promoting a richer and more democratic discourse. Regular algorithmic audits could also identify and address biases in content prioritization, ensuring that underrepresented perspectives are not systematically excluded (Gillespie, 2014; Noble, 2018).
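One way to read the idea of algorithms that surface underrepresented narratives alongside dominant themes is as a re-ranking step applied after engagement prediction. The sketch below is a greedy re-ranker loosely modeled on maximal marginal relevance (MMR). It is not how TikTok, Instagram, or any other platform is known to rank content, and the `Post` type, the relevance scores, and the `penalty` weight are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: str
    theme: str
    relevance: float  # hypothetical predicted-engagement score in [0, 1]


def diversify(candidates, k, penalty=0.4):
    """Greedy re-ranking in the spirit of maximal marginal relevance (MMR).

    At each step, pick the candidate with the highest relevance minus
    `penalty` times the number of already-selected posts sharing its theme.
    With penalty=0 this reduces to ranking by relevance alone.
    """
    remaining = list(candidates)
    selected = []
    shown = {}  # theme -> number of selected posts carrying that theme
    while remaining and len(selected) < k:
        best = max(remaining,
                   key=lambda p: p.relevance - penalty * shown.get(p.theme, 0))
        selected.append(best)
        remaining.remove(best)
        shown[best.theme] = shown.get(best.theme, 0) + 1
    return selected


# Invented candidate pool: patriotic posts score highest on predicted engagement.
candidates = [
    Post("a", "Patriotism", 0.95),
    Post("b", "Patriotism", 0.90),
    Post("c", "Patriotism", 0.85),
    Post("d", "Personal Growth", 0.80),
    Post("e", "Ethical Dilemmas", 0.65),
    Post("f", "Personal Sacrifice", 0.55),
]

print([p.post_id for p in diversify(candidates, k=4, penalty=0.0)])
print([p.post_id for p in diversify(candidates, k=4)])
```

With `penalty=0.0` the top four are the three patriotic posts and the personal-growth post (`a`, `b`, `c`, `d`); with the default penalty of 0.4 the same pool yields `a`, `d`, `e`, `f`, bringing the ethical-dilemma and personal-sacrifice posts into view. The penalty value is arbitrary and would need tuning against both engagement and diversity goals.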

Independent audits of algorithmic systems can provide valuable insights into their inclusivity and biases. Such audits would allow stakeholders to identify imbalances in narrative amplification and implement corrections, ensuring a fairer representation of diverse perspectives. Policymakers should establish regulatory frameworks that mandate transparency in algorithmic processes, including requirements for platforms to disclose the criteria used by their content recommendation systems (Ananny and Crawford, 2020). These regulations should also incentivize platforms to adopt exposure diversity principles, reducing the risks associated with echo chambers and ideological silos.
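The imbalances in narrative amplification that such audits look for can be expressed as a simple ratio: how often a theme appears in what users are shown, relative to how often it appears in what was published. The sketch below assumes the auditor has theme-coded both a sample of published posts and a sample of posts surfaced in test-account feeds; the function names, the 0.5 threshold, and the data are hypothetical.

```python
from collections import Counter


def amplification_ratios(published, recommended):
    """Per-theme amplification: share of a theme among recommended posts
    divided by its share among all published posts.

    A ratio above 1 means the theme is over-represented in what users are
    shown relative to what was published; below 1, under-represented.
    Themes that appear only in `recommended` are ignored.
    """
    if not published or not recommended:
        raise ValueError("both samples must be non-empty")
    pub, rec = Counter(published), Counter(recommended)
    n_pub, n_rec = len(published), len(recommended)
    # (rec/n_rec) / (pub/n_pub), rearranged so the products stay integers
    return {theme: (rec[theme] * n_pub) / (pub[theme] * n_rec) for theme in pub}


def under_amplified(ratios, threshold=0.5):
    """Themes whose exposure falls below `threshold` times their publication share."""
    return sorted(theme for theme, r in ratios.items() if r < threshold)


# Invented audit sample: theme labels of ten published posts and of ten posts
# that surfaced in a test account's feed.
published = ["Patriotism"] * 4 + ["Personal Growth"] * 3 + ["Ethical Dilemmas"] * 3
recommended = ["Patriotism"] * 6 + ["Personal Growth"] * 3 + ["Ethical Dilemmas"]

ratios = amplification_ratios(published, recommended)
print(ratios)
print(under_amplified(ratios))
```

In this invented sample, patriotic content is shown 1.5 times as often as its publication share would predict, while ethical-dilemma content appears at one third of its share and is flagged. A real audit would also need sampling across many accounts and time windows before drawing conclusions.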

To counteract the effects of filter bubbles, platforms should implement features that actively promote diverse dialogues. For instance, curated content threads could juxtapose patriotic narratives with counter-narratives discussing ethical complexities or dissenting views on military recruitment. These tools would foster a more balanced and inclusive public discourse, addressing the polarization risks highlighted by Sunstein (2001) and Pariser (2011).
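At its simplest, a curated thread of this kind reduces to an ordering rule. The sketch below alternates dominant-narrative and counter-narrative posts one-for-one and appends any leftovers; the function `juxtapose` and the post labels are hypothetical, and a real feature would also require editorial decisions about which counter-narratives are paired with which posts.

```python
from itertools import zip_longest

_MISSING = object()  # sentinel, so that None could still be a valid post


def juxtapose(dominant, counter):
    """Build a thread that alternates dominant-narrative and counter-narrative
    posts one-for-one; leftovers from the longer list follow in order."""
    thread = []
    for d, c in zip_longest(dominant, counter, fillvalue=_MISSING):
        if d is not _MISSING:
            thread.append(d)
        if c is not _MISSING:
            thread.append(c)
    return thread


# Hypothetical post labels.
patriotic = ["P1: flag ceremony", "P2: parade footage", "P3: recruitment reel"]
counter_narratives = ["C1: soldier on moral injury", "C2: family on deployment costs"]

for post in juxtapose(patriotic, counter_narratives):
    print(post)
```

Here the thread reads P1, C1, P2, C2, P3, so no patriotic post appears without a contrasting one nearby until the counter-narratives run out.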

By adopting these practices, military campaigns can enhance engagement without compromising inclusivity or democratic values. A balanced narrative strategy can improve public perceptions of military service and help ensure that campaigns contribute positively to societal discourse. These efforts align with global trends in algorithmic governance, reflecting the broader need for digital ecosystems that prioritize fairness, diversity, and accountability.

Broader implications and research directions

This study highlights the significant role of algorithms in shaping public perceptions, both in the context of Croatian military campaigns and on a global scale. The findings emphasize the dual nature of algorithmic personalization: while it enhances engagement by tailoring content to user preferences, it also risks fostering ideological silos and echo chambers. These dynamics underscore the need for greater transparency and accountability in algorithmic governance to mitigate societal risks such as polarization and the marginalization of dissenting viewpoints. One key implication is the necessity of promoting algorithmic diversity to support democratic discourse. Future systems should prioritize exposure to diverse perspectives, breaking the reinforcing loops of personalized content delivery. Recent advances in algorithmic auditing (Binns, 2018; Helberger et al., 2023) suggest practical methods for evaluating algorithmic inclusivity and representational equity. These methods could be adapted to assess the content diversity of military narratives and ensure fair representation of alternative viewpoints.

Another critical area for exploration is the psychological and behavioural impact of algorithmically mediated narratives, especially on younger audiences. Platforms like TikTok and Instagram leverage emotionally resonant content to foster aspirational engagement. However, these strategies may also amplify societal pressures and unrealistic expectations, raising concerns about the mental health implications of such curated environments. Recent research (Taylor, 2022; Chen et al., 2021) indicates that prolonged exposure to algorithmically curated content can exacerbate anxiety and reduce critical engagement with opposing perspectives. Understanding these dynamics could inform the development of ethical frameworks for platform governance.

Cross-cultural comparisons present another valuable avenue for future research. This study has contextualized Croatian campaigns within global trends, comparing them to the strategies of the United States, Israel, Russia, and China. However, further exploration is needed to examine how different socio-political contexts shape algorithmic governance and content personalization. For instance, while countries like China embed algorithmic control within a broader framework of state surveillance (Zhao et al., 2021), smaller states like Croatia must navigate global practices while preserving cultural and institutional autonomy.

Emerging technologies, such as generative AI, also require closer scrutiny. As platforms increasingly integrate AI-driven tools to enhance content creation and personalization, the ethical landscape becomes more complex. Generative models can amplify institutional narratives with unprecedented efficiency, raising new questions about accountability and the boundaries between persuasion and manipulation (Ananny and Crawford, 2020). Future research could investigate how generative AI shapes public perceptions and whether these advancements exacerbate or mitigate existing risks.

In the long term, longitudinal studies are essential to evaluate the cumulative effects of algorithmic mediation on societal cohesion and trust. Examining how audiences interact with algorithmically curated content over time can reveal patterns of polarization and ideological entrenchment, and these insights would inform policy recommendations aimed at fostering healthier digital ecosystems. Scholars such as Dubois and Blank (2018) have noted that the interplay between algorithmic systems and public opinion requires ongoing scrutiny to ensure that emerging technologies align with democratic values.

Finally, collaboration among policymakers, technologists, and researchers is crucial for addressing the challenges of algorithmic governance. Regulatory frameworks should mandate transparency in algorithmic processes and support independent audits to identify and address biases. Educational initiatives that promote media literacy can empower users to engage critically with curated content, fostering a more inclusive and informed public discourse.

Taken together, this study’s findings highlight both the potential and the risks of algorithmically mediated communication. By advancing interdisciplinary research and implementing ethical frameworks, society can harness the benefits of these technologies while minimizing their adverse effects on democratic engagement and social cohesion.

Conclusion

This study critically examines how social media algorithms shape public perceptions of military service through personalized content and the creation of echo chambers. By foregrounding themes such as personal growth, patriotism, and camaraderie, these algorithms enhance audience engagement while marginalizing dissenting perspectives. This raises ethical concerns regarding inclusivity, transparency, and societal representation. The findings underscore the double-edged nature of algorithmic curation: while it enables targeted communication, it also risks amplifying ideological silos and reducing exposure to diverse viewpoints.

The implications of this study extend beyond the Croatian context, contributing to global discussions on algorithmic governance and media ethics. By comparing Croatian military campaigns with those in the United States and Israel, and more briefly with those in Russia and China, the research illustrates how cultural and institutional contexts influence algorithm-driven strategies. The findings highlight the urgent need for regulatory standards that ensure algorithmic transparency, accountability, and diversity, alongside user education programs that promote critical engagement with curated content.

Future research should prioritize longitudinal studies to evaluate the societal impacts of algorithmic personalization, particularly its effects on polarization, trust, and mental health. Comparative analyses across cultural and institutional settings can further clarify how audiences interact with algorithmically curated narratives. Additionally, exploring the role of emerging technologies, such as generative AI, in military communication is crucial for understanding whether they exacerbate or mitigate existing challenges.

To tackle these challenges, interdisciplinary collaboration among policymakers, researchers, and technology developers is essential. Ethical and transparent algorithmic systems must prioritize democratic values and inclusivity, ensuring digital ecosystems promote diverse and equitable public discourse. This study paves the way for future research into the broader societal implications of algorithm-mediated communication in an increasingly digitized world.

References

- Abidin, C. (2021). Mapping internet celebrity on TikTok: Exploring attention economies and visibility labours. Media International Australia, 177(1), 38–53. DOI: 10.1177/1329878X20982167.

- Ananny, M. and Crawford, K. (2020). Seeing without knowing: Limitations of the transparency ideal in algorithmic accountability. New Media & Society, 22(3), 973–989. DOI: 10.1177/1461444819877637.

- Binns, R. (2018). Fairness in machine learning: Lessons from political philosophy. Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency, 149–159.

- Boyd, D. (2014). It’s complicated: The social lives of networked teens. New Haven, CT: Yale University Press.

- Bruns, A. and Highfield, T. (2015). From news blogs to news on Twitter: Gatewatching and collaborative news curation. In: Bruns, A., Enli, G., Skogerbø, E., Larsson, A.O. and Christensen, C. (Eds.) The Routledge companion to social media and politics. New York, NY: Routledge, 325–338.

- Bruns, A. and Highfield, T. (2023). Gatekeeping in the digital age: Algorithms and audience interaction. London: Routledge.

- Bucher, T. (2018). If… Then: Algorithmic power and politics. New York, NY: Oxford University Press.

- Chen, X., Zeng, J. and Kaye, D.B.V. (2021). Algorithmic persuasion and mental health: Exploring TikTok’s influence on youth. Journal of Digital Culture Studies, 12(4), 345–362. DOI: 10.1177/20501579211034256.

- Cinelli, M., De Francisci Morales, G., Galeazzi, A., Quattrociocchi, W. and Starnini, M. (2021). The echo chamber effect on social media. Proceedings of the National Academy of Sciences, 118(9), e2023301118. DOI: 10.1073/pnas.2023301118.

- Couldry, N. and Hepp, A. (2017). The mediated construction of reality. Cambridge: Polity Press.

- Davis, L.R. (2019). Digital militarism: Social media, culture, and war in Israel. Media, War & Conflict, 12(1), 34–49. DOI: 10.1177/1750635218777637.

- Davis, R. and Green, S. (2022). Military narratives on Instagram: Exploring engagement and visual storytelling. Media and Society Quarterly, 45(2), 231–249.

- DeVito, M.A. (2020). From editors to algorithms: A values-based approach to understanding story selection in the Facebook News Feed. Digital Journalism, 8(6), 753–771. DOI: 10.1080/21670811.2019.1623704.

- Dubois, E. and Blank, G. (2018). The echo chamber is overstated: The moderating effect of political interest and diverse media. Information, Communication & Society, 21(5), 729–745. DOI: 10.1080/1369118X.2018.1428656.

- Entman, R.M. (1993). Framing: Toward clarification of a fractured paradigm. Journal of Communication, 43(4), 51–58. DOI: 10.1111/j.1460-2466.1993.tb01304.x.

- Fairclough, N. (2003). Analyzing discourse: Textual analysis for social research. London: Routledge.

- Foucault, M. (1972). The archaeology of knowledge. London: Tavistock Publications.

- Gillespie, T. (2014). The relevance of algorithms. In: Gillespie, T., Boczkowski, P.J. and Foot, K.A. (Eds.) Media technologies: Essays on communication, materiality, and society. Cambridge, MA: MIT Press, 167–194.

- Helberger, N., Karppinen, K. and D’Acunto, L. (2018). Exposure diversity as a design principle for recommender systems. Information, Communication & Society, 21(2), 191–207. DOI: 10.1080/1369118X.2016.1271900.

- Helberger, N., Trilling, D. and Eskens, S. (2023). Algorithmic governance in social media ecosystems: Ethical implications and practical solutions. Journal of Digital Ethics, 10(1), 45–61.

- Kaye, D.B.V., Chen, X. and Zeng, J. (2021). The co-evolution of two Chinese mobile short video apps: Parallel platformization of Douyin and TikTok. Mobile Media & Communication, 9(2), 229–253. DOI: 10.1177/2050157920952120.

- Markham, A.N. and Buchanan, E. (2012). Ethical decision-making and internet research. Association of Internet Researchers Ethics Guide, 1–19.

- Meyer, R., Schroeder, R. and Taylor, K. (2019). Emotion and engagement: Algorithms, metrics, and the production of affective media content. New Media & Society, 21(4), 897–913. DOI: 10.1177/1461444818813822.

- Neuendorf, K.A. (2017). The content analysis guidebook. 2nd ed. Thousand Oaks, CA: Sage.

- Noble, S.U. (2018). Algorithms of oppression: How search engines reinforce racism. New York, NY: NYU Press.

- Pariser, E. (2011). The filter bubble: What the Internet is hiding from you. New York, NY: Penguin Press.

- Sunstein, C.R. (2001). Republic.com. Princeton, NJ: Princeton University Press.

- Taylor, L. (2022). Algorithmic systems and mental health: Exploring the psychological impact of personalized content. Journal of Digital Ethics, 14(2), 113–128.

- Tufekci, Z. (2015). Big questions for social media big data: Representativeness, validity and other methodological pitfalls. Social Media + Society, 1(1). DOI: 10.1177/2056305115578686.

- van Dijck, J. (2013). The culture of connectivity: A critical history of social media. Oxford: Oxford University Press.

- Zhao, Y., Liu, H. and Li, J. (2021). State-driven narratives and algorithmic control: The case of Chinese military campaigns on Douyin. Asian Journal of Digital Communication, 9(3), 278–295. DOI: 10.1177/20501579211234598.

- Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. New York, NY: PublicAffairs.