Publication Information

ISSN 2691-8803

Frequency: Continuous

Format: PDF and HTML

Versions: Online (Open Access)

Year first Published: 2019

Language: English


Hybrid Media Strategies: Shaping Public Opinion in The Digital Age

Gombar Marija*

Centre for Defence and Strategic Studies “Janko Bobetko”

PhD student, University North, Media and Communication

E-mail: gombar.ma@gmail.com, magombar@unin.hr

Received Date: November 26, 2024; Accepted Date: December 04, 2024; Published Date: December 09, 2024

*Corresponding author: Marija Gombar, Centre for Defence and Strategic Studies “Janko Bobetko”; PhD student, University North, Media and Communication; E-mail: gombar.ma@gmail.com, magombar@unin.hr, Phone: +385989709793

Citation: Marija G (2024) Hybrid Media Strategies: Shaping Public Opinion in The Digital Age. Adv Pub Health Com Trop Med: MCADD-117.

DOI: 10.37722/APHCTM.2024206

Abstract

The convergence of military and civilian media strategies on social networks has transformed these platforms into hybrid spaces that significantly influence public opinion and societal dynamics. Initially intended for interpersonal communication, social networks now serve as tools for strategic propaganda, cognitive manipulation, and information dominance. Algorithms are central to this transformation, tailoring content to individual preferences and fostering “filter bubbles” that reinforce biases. Research indicates that false news is about 70% more likely to be shared than factual content, driven by emotionally charged narratives that provoke fear, anger, or surprise and thereby increase virality and engagement. Such dynamics contribute to a fragmented digital ecosystem where polarization is amplified, critical thinking is diminished, and democratic processes are jeopardized. Symbolic language and framing techniques, such as the use of hashtags and targeted narratives, further manipulate perceptions. For instance, during the COVID-19 pandemic, algorithmic prioritization of specific narratives shaped public opinion, while deepfake technologies spread distrust and confusion. Prolonged exposure to such content has been linked to rising levels of anxiety and depression, particularly among younger demographics, with 59% of teens reporting increased stress due to misinformation on social media platforms. This study underscores the urgency of ethical interventions to regulate algorithmic systems, mitigate disinformation, and protect user autonomy. Implementing robust transparency measures, enhancing content moderation, and promoting digital literacy are essential to restoring trust and preserving democratic values. Without such efforts, social networks risk becoming instruments of manipulation rather than platforms for constructive societal engagement.

Keywords: algorithms; digital manipulation; ethical implications; hybrid warfare; information dominance; media polarization; public opinion; social media strategies.

Introduction

In the digital age, the distinction between military and civilian media theories is becoming increasingly blurred, especially within the evolving landscape of social networks. Originally created to facilitate personal communication and social interaction, these platforms have quickly transformed into powerful instruments for influence, manipulation, and strategic communication. This convergence of military and civilian media strategies highlights the dual functionality of social networks: while they enable cultural and social exchange in civilian contexts, they also serve as tools for achieving military and political objectives through the dissemination of propaganda, information dominance, and cognitive manipulation (Freedman, 2017; Pariser, 2011). Military media theories traditionally emphasize strategic communication, focusing on information management, psychological influence, and disinformation to achieve security or political objectives. Meanwhile, civilian media theories examine the social, cultural, and cognitive impacts of media, exploring its role in shaping public opinion and social values (Gillespie, 2014; Tufekci, 2017). However, advancements in technology and the rise of algorithmic systems have facilitated the integration of these approaches, transforming social networks into hybrid media spaces where military and civilian strategies intersect. This intersection poses significant ethical, social, and democratic challenges that necessitate a critical exploration of their implications (Zuboff, 2019).

Algorithms play a central role in this hybridization, acting as gatekeepers of information and mediators of user experience. Designed to enhance user engagement, algorithms curate content that is tailored to individual preferences, creating personalized information ecosystems often referred to as “filter bubbles” (Pariser, 2011). While these systems can improve user satisfaction, they also amplify societal polarization by reinforcing pre-existing beliefs and limiting exposure to diverse perspectives (Sunstein, 2001). In military contexts, algorithms are repurposed to target specific demographic groups with propaganda and disinformation, manipulating public perception to align societal values with strategic objectives (Allcott & Gentzkow, 2017). This dual functionality highlights the ethical dilemmas associated with algorithmic technologies, particularly regarding their impact on privacy, autonomy, and trust in democratic institutions. The framing of information and the strategic use of symbolic language further complicate this landscape. Techniques such as news framing and narrative construction manipulate public perceptions by emphasizing certain aspects of reality while downplaying others (Entman, 1993; Hall, 1997). For instance, during the Russia-Ukraine conflict, hashtags like #StandWithUkraine and #StopRussianAggression were employed by grassroots movements and state-led campaigns alike to shape public discourse and counter misinformation (EEAS, 2022). These practices illustrate how symbolic language and framing can blur the lines between genuine public sentiment and orchestrated propaganda, reinforcing the need for greater transparency and regulatory oversight.

The convergence of military and civilian strategies within social networks has profound implications for democratic processes, social cohesion, and psychological well-being. Exposure to manipulative content, including disinformation and emotionally charged narratives, undermines users’ ability to critically evaluate information, fostering distrust in media and institutions (Kahneman, 2011; Wardle & Derakhshan, 2017). This manipulation distorts public opinion and exacerbates societal polarization, creating echo chambers that hinder constructive dialogue and compromise the inclusivity of democratic discourse (Noelle-Neumann, 1974). This study explores the intersection of military and civilian media strategies within the context of social networks, examining their ethical, psychological, and societal implications. By analyzing algorithms, narrative framing, and the use of symbolic language, it seeks to illuminate the mechanisms through which public opinion is shaped and manipulated in the digital age. The findings underscore the urgency of establishing ethical guidelines and regulatory frameworks to mitigate the risks posed by hybrid media strategies, safeguard democratic values, and promote transparency in the use of personal data (Zuboff, 2019). This analysis provides a foundation for understanding the complexities of hybrid media environments and their impact on public perception and societal trust.

Methodology

This study uses a qualitative research approach to explore the integration of military and civilian communication strategies within social media platforms, focusing on their effects on public opinion and the ethical challenges they raise. The methodology consists of three interconnected stages: a critical literature review, an analysis of illustrative case studies, and the synthesis of theoretical frameworks. Together, these elements provide a comprehensive understanding of how algorithms, misinformation, and content manipulation function in a hybrid media environment. The first stage involves an extensive review of existing academic literature, reports, and theoretical works to establish a foundation for the analysis. Central to this phase is the examination of key concepts such as filter bubbles, echo chambers, and personalized algorithms, as outlined by Eli Pariser, as well as the dynamics of information dominance described by David Freedman. This review also incorporates theories of hybrid warfare, cognitive manipulation, and the role of psychological influence in shaping public opinion through digital media. By integrating insights from scholars such as Elisabeth Noelle-Neumann and Shoshana Zuboff, the study captures the complex nature of algorithmic manipulation and its implications for democratic systems.

The second stage focuses on analyzing specific case studies to illustrate the practical application of hybrid media strategies. Examples include using social networks in political campaigns, spreading disinformation during the COVID-19 pandemic, and employing deepfake technologies in geopolitical contexts. These cases were chosen for their relevance to the study’s objectives and their potential to demonstrate the convergence of military and civilian media theories. Publicly available datasets and institutional reports provided the basis for this analysis, with thematic coding used to identify patterns in algorithmic targeting, content framing, and user engagement. Examining how these strategies operate across different contexts reveals recurring themes of polarization, emotional manipulation, and the erosion of trust in media. The final stage involves synthesizing literature and case study findings to construct a theoretical model reflecting media strategy hybridization. This synthesis highlights the dual role of algorithms in serving both commercial and military objectives, emphasizing their capacity to influence perceptions and behavior. The study also identifies these practices’ ethical and psychological implications, particularly the tension between user autonomy and the covert manipulation of information. The analysis contributes to an evolving understanding of the interplay between digital technologies, societal values, and strategic communication by situating these insights within broader theoretical frameworks.

Throughout the research process, ethical considerations were prioritized. The study relied exclusively on publicly available data and refrained from collecting personal or identifiable information. Additionally, care was taken to ensure the accuracy and credibility of sources, with all references drawn from peer-reviewed journals, reputable organizations, and verified reports. This commitment to ethical rigor underscores the importance of transparency and responsibility in examining the complex dynamics of hybrid media strategies.

Results

The analysis reveals a significant evolution in the role of social networks, which have transitioned from platforms for casual interaction to critical tools for influence, propaganda, and manipulation. This shift highlights their dual application in both military and civilian contexts. The findings demonstrate how algorithms, disinformation campaigns, and symbolic narratives interact to influence public opinion, shape behaviors, and create social fragmentation. Applying military media theories to social networks shows a clear pattern of leveraging algorithmic targeting for cognitive and psychological warfare strategies. For example, algorithms tailor content to individual preferences, amplifying biases and fostering emotional reactions like fear or anger. This targeted manipulation, as McIntyre (2021) described in “Post-Truth”, significantly affects user perceptions in politically charged contexts. A large-scale study by Vosoughi, Roy, and Aral (2018) supports this, showing that false news spreads significantly faster than factual information, thereby highlighting the vulnerability of digital ecosystems to misinformation.

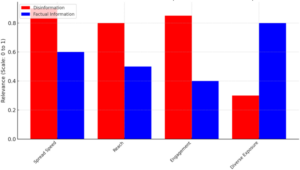

Graph 1: Disinformation vs. factual information: propagation dynamics and impact on filter bubbles

The graph above illustrates the key differences between the spread of disinformation and factual information on social media platforms. The speed and reach of disinformation significantly exceed those of factual information. According to research by Vosoughi, Roy, and Aral (2018), false news is 70% more likely to be shared than accurate news. The primary reason for this disparity is the emotionally charged nature of disinformation, which provokes reactions such as fear, anger, and surprise, thereby increasing its virality and user engagement. In contrast, factual information typically requires a more rational and analytical approach, which leads to slower dissemination and lower engagement levels. Additionally, algorithmic content curation practices contribute to forming “filter bubbles,” limiting users’ exposure to diverse viewpoints and exacerbating societal polarization. This phenomenon highlights the vulnerability of digital ecosystems to manipulation, as algorithms prioritize content designed to elicit emotional responses over content that encourages critical thinking and informed decision-making. These findings emphasize the urgent need to regulate algorithmic systems that shape the digital information landscape. Enhancing transparency and establishing ethical standards are essential steps to mitigate the influence of manipulative content and foster a more resilient digital society.

Benkler et al. (2018) discuss the concept of information dominance, demonstrating how narratives are strategically controlled on social networks. During the COVID-19 pandemic, platforms like Facebook and Twitter managed public discourse by prioritizing official narratives while minimizing alternative viewpoints (Cinelli et al., 2020). This selective visibility reflects a military technique of controlling the flow of information to align public opinion with predetermined objectives. In the realm of psychological warfare, social networks act as channels for spreading emotionally charged and polarizing content. Chesney and Citron (2019) emphasize the growing prevalence of deepfake technologies, which can provoke strong emotional reactions and foster distrust among users. In geopolitical contexts, such as the Russia-Ukraine conflict, manipulative narratives and images on platforms like Telegram have been used to dehumanize opposing groups, further deepening societal divisions (Howard et al., 2022).

Military applications of algorithms

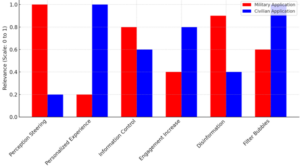

Graph 2: Military and civilian application of algorithms

The graph above highlights the fundamental differences between military and civilian applications of algorithms in the digital age, illustrating how these tools serve distinct purposes based on their context. On the left side of the graphic, military applications of algorithms are emphasized, particularly their role in strategically influencing perceptions and manipulating public opinion. A key aspect of this is perception targeting, where algorithms disseminate propaganda to specific demographic groups, fostering biased or distorted views of reality that align with military or political objectives. Furthermore, information control enables military stakeholders to selectively promote or suppress content, steering public opinion toward preferred narratives. Disinformation is another critical element, involving the manipulation of information to create intentional misconceptions among target audiences. Together, these strategies form a cohesive framework for information manipulation, empowering military actors to achieve their strategic objectives. On the right side of the graphic, civilian applications of algorithms focus on personalization and optimizing user experience. These algorithms analyze user behavior data to tailor content to individual preferences, creating an environment that aligns with users’ interests while increasing engagement—a key factor for commercial success. However, such personalization risks producing filter bubbles, which reinforce existing beliefs and reduce exposure to diverse perspectives. This phenomenon underscores the ethical challenges posed by algorithmic systems in shaping user interactions and perceptions.

Civilian applications of algorithms

The graph illustrates how algorithms are used differently in military and civilian contexts. In military settings, algorithms are used to influence perceptions and control information. In contrast, civilian applications focus on improving user experiences and maximizing engagement. These differing goals highlight the complex ethical responsibilities associated with algorithmic technologies. Military strategies often prioritize manipulation and control, while civilian systems are centered on personalization. However, both contexts carry risks of distortion and isolation. As noted by Total Military Insight, the NATO Strategic Communications Center of Excellence, and VOX-Pol, “social media functions as a hybrid arena in which civilian and military communication strategies intertwine.” This observation emphasizes the increasing overlap between military and civilian media theories, which have traditionally served different purposes. Military theories focus on strategic and manipulative communication, while civilian theories explore cultural and social dimensions. In today’s digital landscape, this intersection has created a unique hybrid information space, necessitating further research to understand these dynamics and clearly define the boundaries between adaptive and manipulative practices.

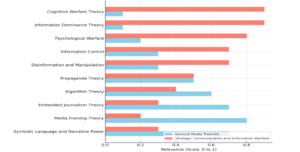

Diagram 1: Comparison of media and strategic communication theories

This diagram provides an overview of interconnected media and strategic communication theories, illustrating their roles in shaping public perception, narratives, and societal behaviors. As communication increasingly shifts to digital platforms, these theories offer essential tools for understanding cognitive warfare, information dominance, and psychological manipulation. Cognitive warfare, as defined by Corman and Schiefelbein (2006), emphasizes how carefully crafted information can exploit cognitive biases and provoke emotional responses, thereby shaping perceptions of reality. This concept closely relates to information dominance, described by Freedman (2017) as the strategic control of the information environment, guiding public attention toward selected narratives while suppressing dissenting opinions. Together, these theories demonstrate how public opinion can be influenced through informational supremacy.

Psychological warfare further develops this framework by highlighting the impact of emotionally charged content, which evokes feelings such as fear, anger, or insecurity, in eliciting specific behavioral responses (Lasswell, 2015; Jowett & O’Donnell, 2018). Propaganda theory, pioneered by Lasswell (1927) and Ellul (1965), complements these approaches by examining the widespread dissemination of emotionally impactful messages to sway public attitudes. In the digital age, social media platforms have amplified these strategies, enabling the use of bots, sponsored posts, and targeted campaigns to manipulate global perceptions (Chesney & Citron, 2019). In civilian contexts, algorithmic systems play a crucial role. Algorithmic personalization (Gillespie, 2014) tailors content to user preferences, creating unique information flows and fostering filter bubbles (Pariser, 2011). These bubbles reinforce existing beliefs while limiting exposure to diverse perspectives, exacerbating polarization and hindering critical thinking. This dynamic aligns with media framing theory (Entman, 1993), which demonstrates how the presentation of information shapes public interpretation by emphasizing certain aspects while omitting others.

Disinformation further complicates this landscape. As noted by Allcott and Gentzkow (2017), the intentional spread of false information fosters “echo chambers,” intensifies polarization, and reduces openness to alternative viewpoints. Platforms like YouTube and Facebook amplify these effects by promoting content that aligns with user behavior. Studies, such as those by Vosoughi, Roy, and Aral (2018), have shown that false information spreads more rapidly than factual news. Moreover, content moderation algorithms designed to manage misinformation (Gillespie, 2018) present their own challenges. While these algorithms aim to reduce harmful content, they often lack transparency and may reflect the biases of those who control them (Diakopoulos, 2016). This issue is especially problematic in politically sensitive environments, where algorithmic decisions may favor powerful stakeholders.

Ultimately, this diagram highlights the dual use of digital media in military and civilian contexts. Military applications prioritize manipulation and control, while civilian uses focus on personalization and engagement. Despite these differences, the shared reliance on algorithmic systems raises ethical challenges in modern communication, particularly the need to balance technological advancements with transparency and accountability. As suggested by Total Military Insight and NATO’s Strategic Communications Center of Excellence & VOX-Pol, social media increasingly serves as a hybrid space where military and civilian communication strategies intertwine, blurring traditional distinctions between these domains.

Ethical and social implications

The findings of this study highlight significant ethical and social challenges that arise from the convergence of military and civilian media strategies within digital platforms. A central issue is the urgent need to address transparency, accountability, and privacy concerns associated with algorithmic systems. While these systems drive engagement and personalization, they also amplify disinformation, foster societal polarization, and erode trust in digital communication.

One pressing concern is the lack of transparency in algorithm design and operation. Social media platforms and technology companies must prioritize openness regarding how algorithms prioritize, curate, and disseminate content. Without transparency, users are vulnerable to manipulative practices that exploit their emotions and biases. To combat this issue, independent audits of algorithmic systems by neutral third-party organizations are essential. Such audits can identify potential biases, manipulative tendencies, and ethical violations, thereby fostering accountability and rebuilding public trust in the digital ecosystem.

Closely tied to transparency is the need for comprehensive and ethical content moderation standards. These standards must strike a careful balance between curbing harmful disinformation and upholding freedom of speech. Developing international frameworks for content moderation is vital to ensuring consistency and fairness. These frameworks should aim to remove malicious and manipulative content while not disproportionately silencing diverse perspectives or marginalizing minority voices. Data privacy is another cornerstone of ethical digital media use. The exploitation of personal data for targeted manipulation presents significant risks to user autonomy and agency. Strengthening global privacy laws to limit the use of personal data for manipulative purposes is critical. Users should have the right to control their data and opt out of algorithmic personalization if they choose. Public awareness campaigns aimed at enhancing media literacy are equally important. Educating individuals about the risks of filter bubbles, echo chambers, and disinformation can empower users to critically assess the information they encounter online. Integrating such programs into global educational systems would help create a more informed and resilient digital citizenry.

The psychological effects of algorithmic systems also demand urgent attention. Repeated exposure to emotionally charged content, such as disinformation and propaganda, has been shown to heighten feelings of fear, anger, and insecurity, contributing to emotional exhaustion and societal distrust. These effects undermine individuals’ ability to make rational decisions and participate constructively in democratic processes. Addressing this requires platforms to prioritize content that promotes critical thinking and constructive dialogue, rather than merely maximizing engagement through emotional manipulation. Regulatory and ethical solutions must also address the growing use of emerging technologies like artificial intelligence-driven deepfakes and augmented reality. These technologies have the potential to further blur the boundaries between fact and fiction, posing new challenges to public trust and media accountability. Establishing ethical frameworks for their use in both military and civilian contexts will require interdisciplinary collaboration among policymakers, technologists, and ethicists. Such frameworks must delineate the boundaries between beneficial applications of these technologies, such as in education or healthcare, and exploitative practices designed to manipulate public opinion or destabilize democratic institutions.

Ultimately, mitigating the ethical and social risks posed by algorithmic systems and hybrid media strategies requires a concerted effort across sectors. Governments, academic institutions, private sector stakeholders, and civil society organizations must work collaboratively to develop innovative and sustainable solutions. These multistakeholder initiatives should focus on fostering transparency, accountability, and ethical practices in digital media while simultaneously safeguarding democratic values and individual rights. Such collaborations are essential in ensuring that social media platforms contribute positively to global communication, trust, and social cohesion in an increasingly complex digital landscape.

Discussion

In the digital age, the distinction between military and civilian media theories is becoming increasingly unclear, particularly with the rise of algorithms. Military media theories typically focus on strategic communication, emphasizing information management and disinformation. In contrast, civilian media theories prioritize delivering information and entertainment that cater to individual user needs (Freedman, 2017). However, advancements in algorithmic technology, which aim to enhance user experiences through content personalization, are promoting a convergence between these two approaches (Gillespie, 2014). In the civilian sector, algorithms are essential for content personalization as they analyze behavioral patterns to create tailored information environments that boost user engagement (Pariser, 2011). When these algorithms are adapted for military use, they can manipulate public opinion through selective content placement and the dissemination of propaganda, effectively serving as tools for strategic influence (Allcott & Gentzkow, 2017). This dual functionality allows algorithms to be employed in both civilian and military contexts: in civilian environments, they primarily serve commercial interests, while in military settings, they aim to shape perceptions according to specific objectives (Tufekci, 2015). Moreover, algorithms can have a significant collective impact, often creating “filter bubbles” that limit exposure to diverse perspectives. This limitation can increase polarization and affect public opinion (Pariser, 2011: 89). Such dynamics raise important ethical questions regarding media responsibility, privacy protection, and society’s ability to resist manipulation (Zuboff, 2019: 114).

Algorithms in media and their implications

Algorithms in digital media are essential tools for enhancing user experiences by tailoring content to individual interests, behaviors, and demographic profiles. They can be categorized into four primary functions: recommendation algorithms, personalization algorithms, echo chamber algorithms, and content detection algorithms. Each function uses different methods and techniques to create a personalized and curated user experience, thereby influencing user perceptions and engagement across digital platforms.

Diagram 2: The central role of algorithms in media theory and strategic communication

The diagram highlights the crucial role of algorithms in influencing user experiences and perceptions within general media theories, as well as theories of strategic communication and information warfare. Algorithms fulfill a dual function in the realm of digital communication: in civilian contexts, they customize content to match user interests, while in military applications, they serve to manipulate and control information. Within general media theories, algorithms enable the personalization of content, provide recommendations based on user behavior, and filter information according to individual preferences. This results in the creation of personalized “information bubbles” that can limit users’ exposure to a range of viewpoints, potentially shaping their understanding of reality (Pariser, 2011; Gillespie, 2014). In strategic communication and information warfare, algorithms act as instruments for shaping perceptions, disseminating disinformation, and controlling information. They facilitate the promotion of particular narratives and the selective filtering of information, thereby influencing public opinion to align with the strategic goals of specific actors. This can give users a distinct, curated experience of reality and manipulate their perceptions (Allcott & Gentzkow, 2017; Freedman, 2017).

Diagram 3: Types of algorithms in media (as per the author)

The diagram illustrates how various algorithms in media personalize content based on audience interests and values, influencing user experiences. These algorithms serve specific functions that enable platforms to customize, filter, moderate, and target content according to user preferences. While they enhance engagement and provide tailored experiences, they also create challenges such as media fragmentation, polarization within echo chambers, and transparency concerns in content moderation. Recommendation algorithms, for instance, suggest content based on user interests and past interactions, as seen on platforms like YouTube, Netflix, and Spotify (Ricci et al., 2021). They primarily fall into two categories: collaborative filtering, which relies on user similarities, and content-based filtering, which focuses on the content’s characteristics. Collaborative filtering connects users with common interests, while content-based filtering recommends items that align with a user’s specific preferences.

Recommendation Algorithms

Recommendation algorithms suggest content based on users’ interests, past interactions, and preferences. Platforms like YouTube, Netflix, and Spotify use these algorithms to enhance user engagement and prolong interaction on their platforms (Ricci et al., 2021). There are two main types of recommendation algorithms: collaborative filtering and content-based filtering. Collaborative filtering groups users with similar preferences, fostering a sense of community among digital platform users. For instance, if two users consume similar content, items that one of them has engaged with can be recommended to the other. In contrast, content-based filtering focuses on the characteristics of the content, tailoring recommendations to match users’ preferences; for example, it might recommend historical documentaries to users interested in history. While these algorithms do increase engagement, they can also fragment the media landscape by limiting users’ awareness of broader contexts and alternative sources.
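The difference between the two families can be made concrete with a minimal sketch. The catalogue, user names, viewing histories, and tags below are hypothetical toy data, and the functions `collaborative` and `content_based` are illustrative names only. Cosine similarity and tag overlap are assumed here as simple stand-ins; production recommender systems are far more elaborate, and this is not a description of how any named platform works.

```python
from math import sqrt

# Hypothetical toy catalogue and viewing history (1 = watched, 0 = not watched).
ITEMS = ["ww2_documentary", "roman_empire_series", "pop_concert", "cooking_show"]
WATCHED = {
    "ana": [1, 1, 0, 0],
    "boris": [1, 0, 0, 0],
    "clara": [0, 0, 1, 1],
}
# Hypothetical content tags, used only by the content-based variant.
TAGS = {
    "ww2_documentary": {"history", "documentary"},
    "roman_empire_series": {"history", "drama"},
    "pop_concert": {"music"},
    "cooking_show": {"food"},
}


def cosine(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def collaborative(user):
    """Suggest unseen items watched by the most similar other user."""
    mine = WATCHED[user]
    others = [u for u in WATCHED if u != user]
    nearest = max(others, key=lambda u: cosine(mine, WATCHED[u]))
    if cosine(mine, WATCHED[nearest]) == 0.0:
        return []  # no overlapping history, so there is nothing to borrow
    theirs = WATCHED[nearest]
    return [item for item, m, t in zip(ITEMS, mine, theirs) if t and not m]


def content_based(user):
    """Suggest unseen items that share a tag with something the user watched."""
    seen = {item for item, w in zip(ITEMS, WATCHED[user]) if w}
    profile = set().union(*(TAGS[item] for item in seen))
    return [item for item in ITEMS if item not in seen and TAGS[item] & profile]


print(collaborative("boris"))
print(content_based("boris"))
```

In this toy setting both approaches keep the user inside the history genre, and neither ever surfaces content from outside the user's existing interests, which is the fragmentation risk noted above.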

Personalization Algorithms

Personalization algorithms enhance the user experience by adapting content to demographic and behavioral traits (Ricci et al., 2021). Demographic algorithms tailor content based on attributes like age, gender, and location. For example, younger audiences may encounter more content related to pop culture, while older users might see content that aligns with their demographic profile. Behavioral algorithms analyze user activities, including search histories and interactions, to predict preferences and customize content, encouraging longer platform engagement (Pariser, 2011). However, this approach risks creating “filter bubbles,” where users are mostly exposed to content that reinforces their beliefs, thereby reducing access to diverse perspectives (Sunstein, 2001).
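The feedback loop behind a filter bubble can be sketched in a few lines of Python. This is a deliberately simplified model under stated assumptions, not an account of any real platform: the topic list, the function names, the equal starting weights, and the rule that each engagement adds a fixed amount to a topic's weight are all hypothetical.

```python
def exposure_shares(weights):
    """Share of the feed given to each topic, proportional to its weight."""
    total = sum(weights.values())
    return {topic: weight / total for topic, weight in weights.items()}


def simulate_feedback_loop(preferred, topics, rounds=5):
    """Track how much of the feed the user's preferred topic occupies over time.

    Assumption: the user engages only with the preferred topic, and the
    platform raises that topic's weight by 1.0 after each engagement.
    """
    weights = {topic: 1.0 for topic in topics}  # every topic starts equal
    history = []
    for _ in range(rounds):
        shares = exposure_shares(weights)
        history.append(shares[preferred])
        weights[preferred] += 1.0  # behavioural signal feeds back into ranking
    return history


topics = ["politics", "science", "sports", "culture", "local news"]
history = simulate_feedback_loop("politics", topics)
print([round(share, 2) for share in history])
```

Under these assumptions the preferred topic grows from one fifth of the feed to more than half within five rounds, while every other topic shrinks, even though the user never asked to see less of them.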

Echo Chamber Algorithms

Echo chamber algorithms create closed digital communities where users primarily encounter content that aligns with their existing views. By analyzing user interactions, these algorithms curate content that strengthens group cohesion while minimizing exposure to opposing perspectives (Bakshy et al., 2015). As users begin to believe their views are the dominant ones within these communities, polarization intensifies, and the consensus within echo chambers becomes falsely reinforced, limiting opportunities for critical discourse (Pariser, 2011).

Content Detection Algorithms

Content detection algorithms play a critical role in ensuring the credibility of shared information and compliance with platform policies by moderating and reducing misinformation. Authentication algorithms assess content accuracy, removing unreliable information to protect public trust (Gillespie, 2018). These algorithms rely on external data sources and machine learning techniques to identify misinformation. Additionally, content moderation algorithms remove content that violates guidelines, such as hate speech or violent material. While these practices are essential for maintaining safe digital environments, they raise concerns about transparency and censorship, challenging the balance between content regulation and freedom of expression (Gillespie, 2018; Diakopoulos, 2016).

Media Framing Theory

Media framing theory emphasizes how the presentation of information influences audience interpretation. Introduced by Erving Goffman (1974) and expanded upon by Robert Entman (1993), the theory highlights how selective emphasis within the media shapes public understanding of events and issues. For instance, framing migration as a “threat” to security cultivates fear and negative attitudes toward migrants. This selective framing influences audience perceptions and shapes value judgments that align with strategic narratives. Consequently, media framing becomes a powerful tool for guiding public discourse and shaping social attitudes.

Symbolic Language and Narrative Power

Symbolic language, which includes images, narratives, and cultural symbols, plays a crucial role in eliciting emotional responses and shaping societal norms. Roland Barthes (1972) describes media symbols as “myths” that convey values through emotionally charged imagery. Similarly, Stuart Hall (1997) emphasizes how symbolic language directs audience identification with specific narratives, shaping collective attitudes. For example, emotionally resonant words and images can evoke strong reactions, fostering alignment with particular narratives and agendas.

The interconnected theories and factors outlined here illustrate how algorithms, media framing, and symbolic language work together to influence public perception and interpretation. Through content personalization, media framing, and symbolic influence, digital platforms shape societal beliefs, values, and norms, underscoring the need for transparency and ethical accountability in algorithmic practices.

Symbolic language and narrative power

Symbolic language used on social networks, such as hashtags, memes, and viral posts, plays a crucial role in shaping collective identities and driving social movements. These platforms allow individuals and groups to express their ideologies, values, and shared goals through accessible but powerful symbols that resonate with diverse audiences. Tufekci (2017) highlights how movements like #MeToo and #BlackLivesMatter have leveraged symbolic language to enhance their global impact, fostering solidarity and encouraging meaningful collective action. These campaigns illustrate how digital semiotics, the use of visual and textual symbols, can create a sense of belonging and purpose within online communities, while also motivating offline social and political engagement.

The strategic use of symbolic language is not limited to civilian movements; it is increasingly employed in military and political contexts as well. Castells (2015) describes social networks as “autonomous communication spaces” that facilitate the dissemination of targeted narratives aimed at shaping public perception. For instance, during the Russia-Ukraine conflict, hashtags like #StandWithUkraine and #StopRussianAggression were utilized by both grassroots initiatives and state-led campaigns. These efforts sought to counter disinformation, promote international solidarity, and mobilize global support (EEAS, 2022; AFUO, 2022). Such examples demonstrate the dual purpose of symbolic language, blurring the lines between genuine public sentiment and organized propaganda. The ability to convey emotionally charged messages through widely shared symbols showcases the effectiveness of symbolic language as a tool for influencing global audiences and aligning societal attitudes with strategic objectives.

Digital platforms also facilitate what Bennett and Segerberg (2012) refer to as “connective action,” where individuals align their personal values with broader collective goals. By adopting and sharing popular hashtags or memes, military and political entities can infiltrate civilian discourse, embedding their narratives into everyday communication. This integration emphasizes the importance of narrative design in leveraging social networks for influence, as emotionally resonant symbols can reinforce specific agendas while appearing organic. Barthes’ (1972) concept of myth-making further supports this view, suggesting that media symbols serve as “myths” that convey cultural values and norms through emotionally charged language and imagery. The convergence of military and civilian communication strategies within social networks creates a hybrid discourse that reshapes perceptions of reality. Dean (2010) critiques this blending, noting that it undermines the traditional distinctions between information dissemination and manipulation, ultimately eroding trust in digital communication. Similarly, Wardle and Derakhshan (2017) argue that the strategic framing of information on social media compromises users’ ability to distinguish fact from propaganda. This hybridization not only challenges media literacy but also exposes audiences to the risks of manipulation through the symbolic framing of events and issues.

Greater transparency and regulatory oversight are essential to address the ethical and social risks associated with symbolic language and narrative power. Platforms must ensure that users can differentiate between genuine expressions of public sentiment and orchestrated campaigns. Additionally, fostering digital literacy to critically evaluate the narratives embedded in hashtags and memes will empower users to navigate the complexities of hybrid media spaces. As symbolic language continues to shape collective identities and influence global perceptions, understanding its mechanisms and implications remains critical for safeguarding public trust and promoting ethical digital communication.

Social networks as a hybrid media space

Social networks have evolved from platforms primarily intended for personal communication into essential mediums for sharing a wide range of information, including military and political strategies. This transformation has created hybrid media spaces where civilian and military objectives intersect, forming a unique ecosystem capable of influencing user opinions, perceptions, and behaviors (Chadwick, 2017). This convergence highlights the dual role of social networks, which cater to civilian engagement while also serving as tools for strategic influence in military contexts. In civilian applications, social network algorithms are mainly designed to enhance user experiences and increase engagement. They curate content that aligns with individual preferences, driving user interaction and extending the time spent on the platform (Pariser, 2011). However, in military contexts, these same algorithmic principles are repurposed to spread propaganda and disinformation. Algorithms in this realm target audiences based on their psychological and emotional profiles, amplifying content intended to elicit strong reactions such as fear, anger, or patriotism to achieve specific strategic objectives (Zuboff, 2019). This overlap demonstrates how military goals, like information control, parallel civilian aims, such as audience retention, and highlights how algorithms can disseminate manipulative content.

For instance, algorithms that were originally designed to deliver targeted advertisements can also facilitate the spread of propaganda aimed at shaping public opinion in favor of particular political or military agendas. This is accomplished through “framing” techniques, in which content is presented in a seemingly objective manner while aligning with specific narratives (Entman, 1993). By employing framing strategies, social networks create echo chambers—self-reinforcing environments where users are exposed only to information that confirms their existing beliefs. These echo chambers can intensify societal polarization, as individuals perceive their curated content as credible and authoritative (Pariser, 2011). In this context, emotionally charged content proves to be especially powerful, as it elicits strong responses that resonate with both military strategies of influence and civilian goals of engagement (Chadwick, 2017). Such dynamics are apparent in disinformation campaigns during political elections or conflicts. For example, algorithms have been used to negatively frame opponents by distributing tailored propaganda, effectively swaying public opinion while presenting the content as unbiased news (Wardle & Derakhshan, 2017). This dual functionality of algorithms underscores the blurred boundaries between civilian and military applications, necessitating a nuanced understanding of their ethical implications and societal impact.

Ethical and social implications of entanglement

The blending of military and civilian theories within social networks has significant implications for political attitudes, societal cohesion, and ethical standards. Military techniques that manipulate public perception in civilian environments often exacerbate polarization and amplify extreme viewpoints. Repeated exposure to content that aligns with users’ existing beliefs distorts political realities, deepens societal divisions, and fosters ideological rigidity. These dynamics are especially pronounced during election campaigns, where algorithmic targeting of political messages exploits individuals’ psychological vulnerabilities. Moreover, incorporating military strategies into civilian media shapes social norms and values through manipulative content, exposing users to narratives, such as patriotism or perceived external threats, that align societal values with specific agendas.

The manipulative techniques employed on social networks have significant psychological effects, leading to heightened feelings of fear, uncertainty, and doubt about information credibility. Social media algorithms are often designed to prioritize emotionally charged content, provoking audience reactions and increasing user engagement. However, prolonged exposure to such content can create an atmosphere of uncertainty and skepticism, leaving users in a state of cognitive dissonance and questioning their perception of reality (Kahneman, 2011). As a result, social networks influence user behavior and significantly affect users’ emotional states.

The convergence of military and civilian media theories in social networks raises critical ethical concerns, particularly regarding privacy, psychological well-being, and information integrity. As social networks become more pervasive, distinguishing between content generated for commercial purposes and that which is designed to manipulate perceptions in support of political or military agendas becomes increasingly challenging. This ambiguity introduces significant ethical dilemmas that necessitate close scrutiny. A central issue is the infringement on user privacy. Algorithms that monitor behavior and categorize individuals based on their interests enable the precise targeting of military and political messages, often without users’ awareness or consent.

Pariser (2011) highlights that the personalization of content creates “echo chambers,” restricting freedom of thought and choice by presenting users with information that reinforces their existing beliefs. In a military context, these echo chambers facilitate the targeting of specific demographic groups with tailored narratives that covertly and systematically shape opinions. Exploiting personal data for military and security operations underscores the ethical responsibilities of social media platforms. Companies wield significant power over user information, and manipulating this data to shape public perception raises concerns about adherence to ethical principles. Using personal data for propaganda infringes on users’ right to informed consent and erodes trust in these platforms (Zuboff, 2019). The merging of military and civilian tactics on social networks has significant psychological implications. Users are often exposed to content designed to provoke strong emotional reactions, such as anger or fear. Kahneman (2011) explains that these techniques impair users’ ability to make rational decisions, which ultimately affects their attitudes and behaviors.

Prolonged exposure to manipulative content can lead to emotional exhaustion, a growing issue linked to rising levels of anxiety and depression, particularly among younger users (Anderson & Jiang, 2018). When psychological techniques are incorporated into military strategies, social networks transform into instruments for psychological operations, undermining users’ emotional well-being and increasing their susceptibility to manipulation.

The prevalence of disinformation on these platforms further complicates the situation, jeopardizing the credibility of information and fostering widespread distrust in traditional media institutions. Wardle and Derakhshan (2017) define disinformation as “the intentional dissemination of false information to manipulate public perception.” Such manipulation erodes trust in media and democratic processes as users struggle to distinguish between falsehoods and authentic information. This destabilization of public opinion undermines democratic mechanisms, leaving the public increasingly hesitant to trust diverse sources of information (Chadwick, 2017). The widespread circulation of misinformation and manipulative content also diminishes users’ critical thinking abilities, making them more vulnerable to simplistic or extreme narratives. Goffman’s (1974) framing theory highlights how the presentation of information shapes perceptions and serves specific agendas. Social networks exacerbate this issue by curating content through algorithms that reinforce existing biases. Consequently, disinformation and framing become powerful tools that erode public trust and shape opinions in ways that threaten an open society’s foundational values.

A significant consequence of the convergence between military and civilian strategies on social networks is the weakening of democratic processes and social cohesion. When platforms employ military techniques for political purposes, they foster polarization and destabilization within communities. Gunitsky (2015) argues that manipulating social networks for political objectives disrupts democratic norms and intensifies societal divisions. Exposure to emotionally charged content that incites fear or anger toward specific groups leads to the fragmentation of social communities and reduces tolerance for differing viewpoints. This dynamic has profound implications for democracy, resulting in a “spiral of silence,” where individuals hesitate to express their opinions due to fear of conflict or social disapproval (Noelle-Neumann, 1974). Consequently, extreme viewpoints can dominate public discourse, marginalizing diverse perspectives and undermining the principles of pluralism and open dialogue. These trends erode social cohesion and weaken the foundations of a democratic society.

The convergence of military and civilian tactics within social networks requires urgent attention to the ethical and social challenges it presents. Using military strategies to influence public opinion on civilian platforms infringes on user privacy, autonomy, and psychological well-being, leading to long-term repercussions for democratic processes and social unity. Addressing these risks necessitates the development of ethical guidelines and regulations to limit the deployment of military tactics on social networks. Such measures are essential for preserving information integrity and encouraging critical reflection among users, safeguarding democratic values and societal cohesion.

Conclusion

In the evolving digital landscape, social networks have shifted from being mere platforms for personal connection to becoming hybrid arenas where military and civilian media strategies converge. This convergence raises significant social and ethical concerns. While these platforms facilitate interpersonal communication, they increasingly function as tools for propaganda, information operations, and the manipulation of public perception. The merging of military and civilian media creates challenges in distinguishing genuine information from manipulation, with serious consequences for democratic processes, societal norms, and users’ psychological well-being. The interaction between civilian and military strategies on social networks is exemplified by the use of algorithms. Originally designed to enhance user engagement through personalized content, these algorithms have been appropriated for military purposes, enabling targeted outreach to specific demographic groups with tailored content and propaganda. Pariser (2011) notes that “filter bubbles” emerge as algorithms selectively deliver information that aligns with users’ preexisting beliefs, isolating them from alternative viewpoints. This segmentation not only reinforces biases but also heightens the psychological impact of manipulative content, limiting users’ capacity for critical thinking and objective assessment of information.

Framing techniques and disinformation further undermine the credibility of information essential for democracy. As defined by Entman (1993), framing manipulates perceptions of reality by highlighting particular aspects of a narrative while omitting others. When combined with disinformation, especially within algorithmically generated echo chambers, users increasingly struggle to differentiate authentic content from falsehoods. This manipulation erodes trust in information sources, exacerbates societal polarization, and undermines social cohesion. Wardle and Derakhshan (2017) emphasize the dangers of misinformation, particularly its role in weakening democratic foundations by impairing the public’s ability to distinguish truth from propaganda. The psychological effects of exposure to manipulative content are profound. Emotionally charged material, whether rooted in military disinformation or civilian marketing, provokes reactions such as fear, anger, and insecurity, which can detract from rational decision-making. Kahneman (2011) points out that targeted manipulation of perceptions can significantly influence attitudes and behaviors, while Gunitsky (2015) notes its destabilizing effects on political processes and societal unity. By embedding military narratives into civilian digital contexts, social networks contribute to heightened polarization, entrench extreme viewpoints, and undermine democratic ideals of inclusivity and open dialogue.

The blending of military and civilian strategies on social networks poses a considerable threat to democratic values. The deliberate use of military manipulation techniques in civilian contexts limits users’ access to diverse and authentic information, stifles open discourse, and intensifies polarization. As trust in information sources and digital platforms wanes, democratic participation declines. Noelle-Neumann’s (1974) spiral of silence theory illustrates how exposure to one-sided narratives can discourage individuals from expressing dissenting opinions, further diminishing the diversity of perspectives vital for a thriving democracy. Consequently, social networks increasingly host fragmented and controlled narratives, straying far from their original purpose as open platforms for free expression and information exchange.

This hybridization of military and civilian strategies on social networks requires urgent ethical and regulatory interventions to combat algorithmic manipulation and disinformation and protect public perception. Establishing robust ethical guidelines is essential to preserving the integrity of these platforms as spaces for genuine interaction and information sharing. Zuboff (2019) underscores the need to safeguard user privacy and prevent the exploitation of personal data for manipulative purposes. Regulatory frameworks should promote transparency, limit algorithm misuse, and empower users to navigate digital spaces without undue influence. By prioritizing these measures, society can ensure that social networks positively contribute to democratic values, foster informed public discourse, and protect individual autonomy in an increasingly complex digital age.

References

- Allcott H, Gentzkow M. Social media and fake news in the 2016 election. Journal of Economic Perspectives. 2017;31(2):211-36.

- Anderson M, Jiang J. Teens, social media & technology 2018. Pew Research Center; 2018.

- Australian Federation of Ukrainian Organizations (AFUO). Advocacy and community mobilization for Ukraine [Internet]. 2022. Available from: https://www.ozeukes.com

- Bakshy E, Messing S, Adamic LA. Exposure to ideologically diverse news and opinion on Facebook. Science. 2015;348(6239):1130-2.

- Barthes R. Mythologies. Translated by Lavers A. New York: Hill and Wang; 1972.

- Benkler Y, Faris R, Roberts H. Network propaganda: Manipulation, disinformation, and radicalization in American politics. Oxford: Oxford University Press; 2018.

- Bennett WL, Segerberg A. The logic of connective action: Digital media and the personalization of contentious politics. Information, Communication & Society. 2012;15(5):739-68.

- Castells M. Networks of outrage and hope: Social movements in the Internet age. John Wiley & Sons; 2015.

- Chadwick A. The hybrid media system: Politics and power. Oxford: Oxford University Press; 2017.

- Chesney B, Citron D. Deep fakes: A looming challenge for privacy, democracy, and national security. California Law Review. 2019;107:1753.

- Cinelli M, Quattrociocchi W, Galeazzi A, Valensise CM, Brugnoli E, Schmidt AL, et al. The COVID-19 social media infodemic. Scientific Reports. 2020;10(1):1-10.

- Corman SR, Schiefelbein J, Acheson K, Goodall B, Mcdonald K, Trethewey A. Communication and media strategy in the jihadi war of ideas. Tempe, AZ: Consortium for Strategic Communication, Arizona State University; 2006.

- Dean J. Blog Theory: Feedback and Capture in the Circuits of Drive. Polity; 2010.

- Diakopoulos N. Accountability in algorithmic decision making. Communications of the ACM. 2016;59(2):56-62.

- Entman RM. Framing: Toward clarification of a fractured paradigm. Journal of Communication. 1993;43(4):51-8.

- Freedman L. The transformation of strategic affairs. Routledge; 2017.

- European External Action Service (EEAS). Disinformation resilience during the Russia-Ukraine conflict: Strategies and narratives [Internet]. 2022. Available from: https://eeas.europa.eu

- Gillespie T. The relevance of algorithms.

- Gillespie T. Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press; 2018.

- Goffman E. Frame analysis: An essay on the organization of experience. Northeastern University Press; 1974.

- Gunitsky S. Corrupting the cyber-commons: Social media as a tool of autocratic stability. Perspectives on Politics. 2015;13(1):42-54.

- Hall S. Representation: Cultural representations and signifying practices. London: Sage; 1997.

- Jowett GS, O’Donnell V. Propaganda & persuasion. Sage Publications; 2018.

- Kahneman D. Thinking, fast and slow. Farrar, Straus and Giroux; 2011.

- McIntyre L. The hidden dangers of fake news in post-truth politics. Revue Internationale de Philosophie. 2021;75:113-24.

- NATO Strategic Communications Centre of Excellence & VOX-Pol. Social Media as a Tool of Hybrid Warfare [Internet]. 2016. Available from: https://stratcomcoe.org

- Noelle-Neumann E. The spiral of silence: A theory of public opinion. Journal of Communication. 1974;24(2):43-51.

- Pariser E. The filter bubble: What the Internet is hiding from you. Penguin UK; 2011.

- Ricci F, Rokach L, Shapira B. Recommender systems: Techniques, applications, and challenges. Recommender Systems Handbook. 2021;1-35.

- Sunstein C. Republic.com. Princeton University Press; 2001.

- Sunstein CR. #Republic: Divided democracy in the age of social media. Princeton University Press; 2018.

- Total Military Insight. The Role of Social Media in Hybrid Warfare Strategies [Internet]. Available from: https://totalmilitaryinsight.com

- Tufekci Z. Algorithmic harms beyond Facebook and Google: Emergent challenges of computational agency. Colorado Technology Law Journal. 2015;13:203.

- Tufekci Z. Twitter and tear gas: The power and fragility of networked protest. Yale University Press; 2017.

- Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. 2018;359(6380):1146-51.

- Wardle C, Derakhshan H. Information disorder: Toward an interdisciplinary framework for research and policymaking. Council of Europe. 2017;27:1-107.

- World Health Organization. Laboratory testing for 2019 novel coronavirus (2019-nCoV) in suspected human cases: Interim guidance, 17 January 2020. World Health Organization.

- Zuboff S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. Public Affairs; 2019.